The mounting pressure on software engineering teams to deliver more with less has created a perfect storm for AI adoption. Ninety-five percent of developers are already using AI coding assistants and gaining new efficiencies. But with coding taking up only 30% of the delivery cycle, organizations are also looking to apply AI to the other tasks in the software delivery cycle.

Harnessing AI from feature inception to operations promises improvements in efficiency, quality and speed. But while the potential is vast and evolving rapidly, the tooling landscape is still in its early days. For leaders, it’s challenging to know where to begin and how to invest.

This article provides a blueprint for thinking about AI-assistance tools in a software engineering organization. It is intended as a mental model for keeping up with a fast-moving market.

No one tool to rule them all

Clients often ask us, “Which AI tool should we use for software delivery tasks other than coding?” The answer is that there’s no single tool, and that’s not just because the market is still evolving. There will never be a one-size-fits-all solution because generative AI (GenAI) assistance should be infused across the delivery toolchain and complement users’ existing tools and workflows.

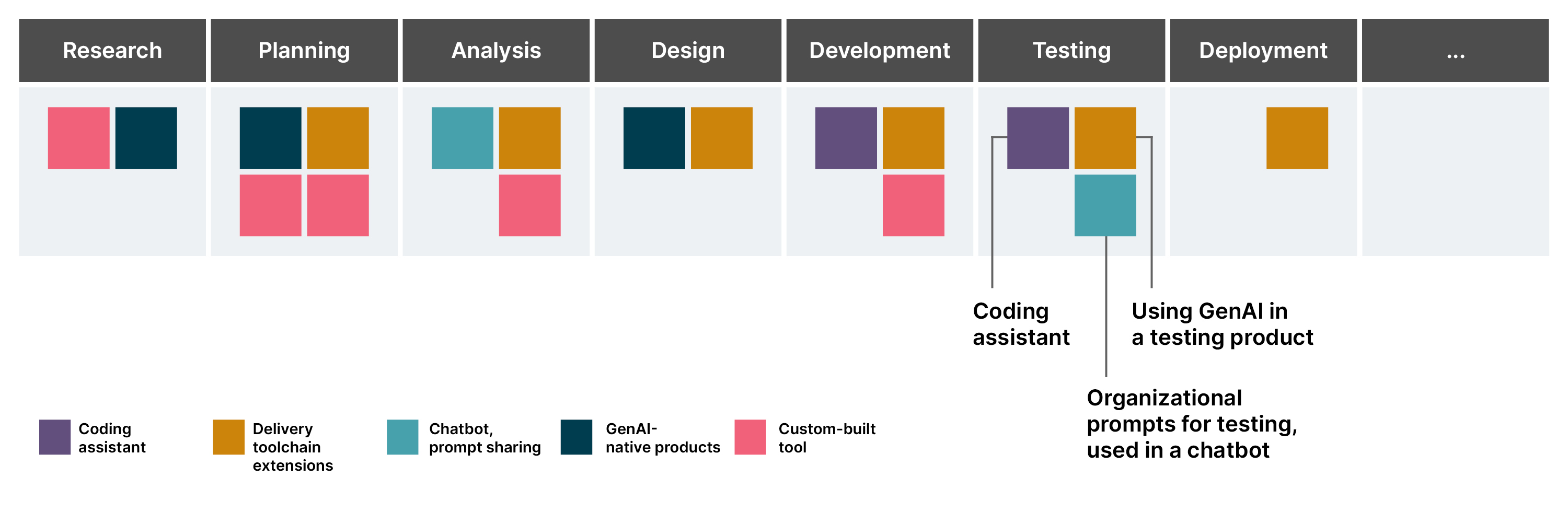

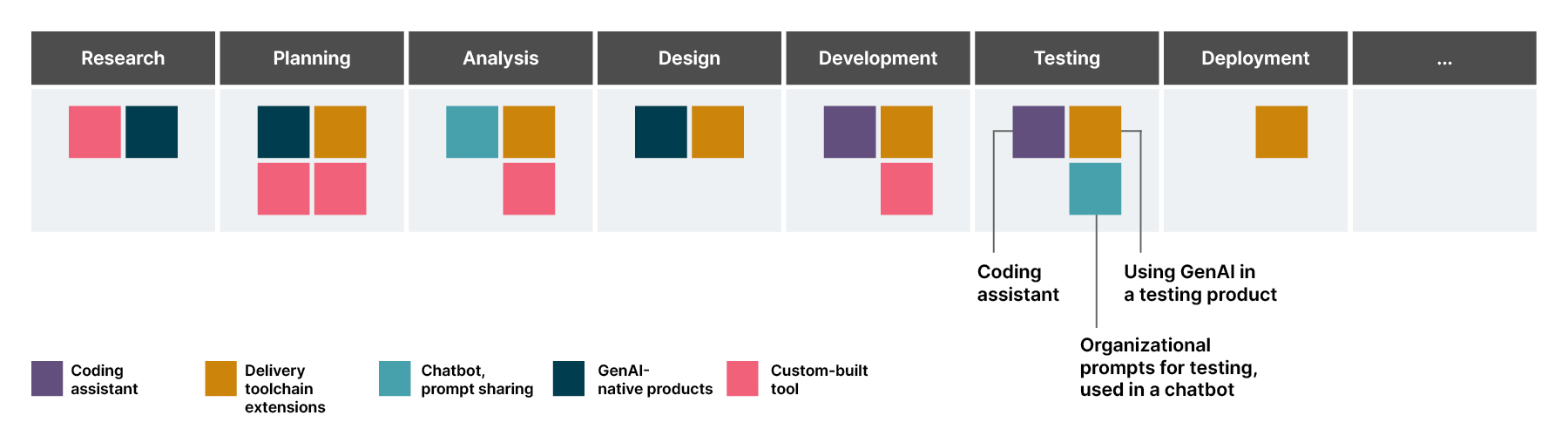

Let’s take testing as an example:

- Developers and QAs can use a coding assistant to generate and improve test code, and refine their testing scenarios.

- QAs could prompt a chatbot to brainstorm “what can go wrong” scenarios for a go-live.

- The vendor of a testing product already used by the organization might enhance its functionality with AI.

Or let’s look at how AI could be used in the design space:

- Visual designers can use AI extensions to Figma.

- A developer could generate prototype code from a design or an image with an open source tool.

- A user researcher can use a custom-built prompt in a chatbot to prepare user research interviews, and analyze the results of the user research with an AI-native product like Kraftful.

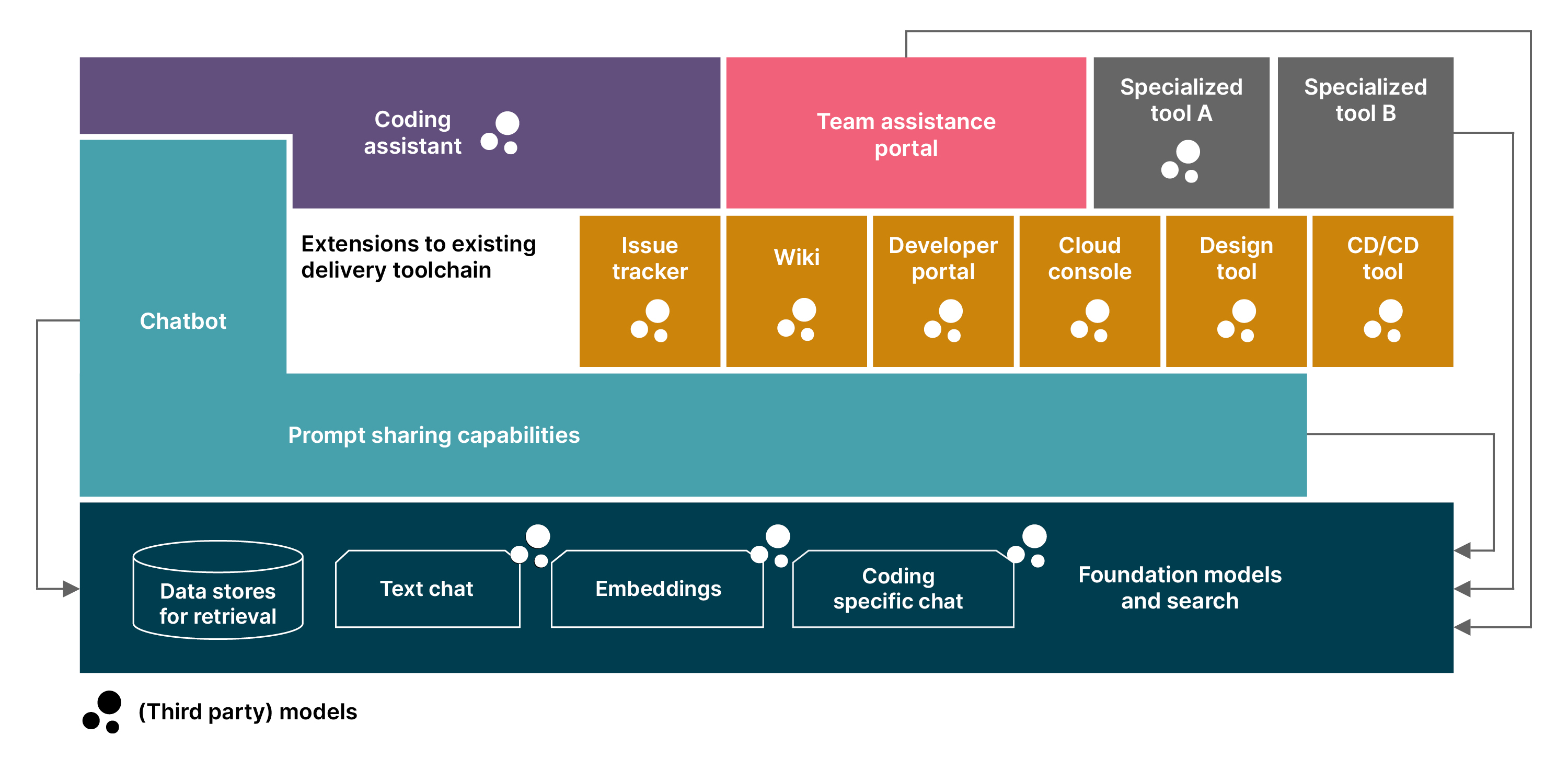

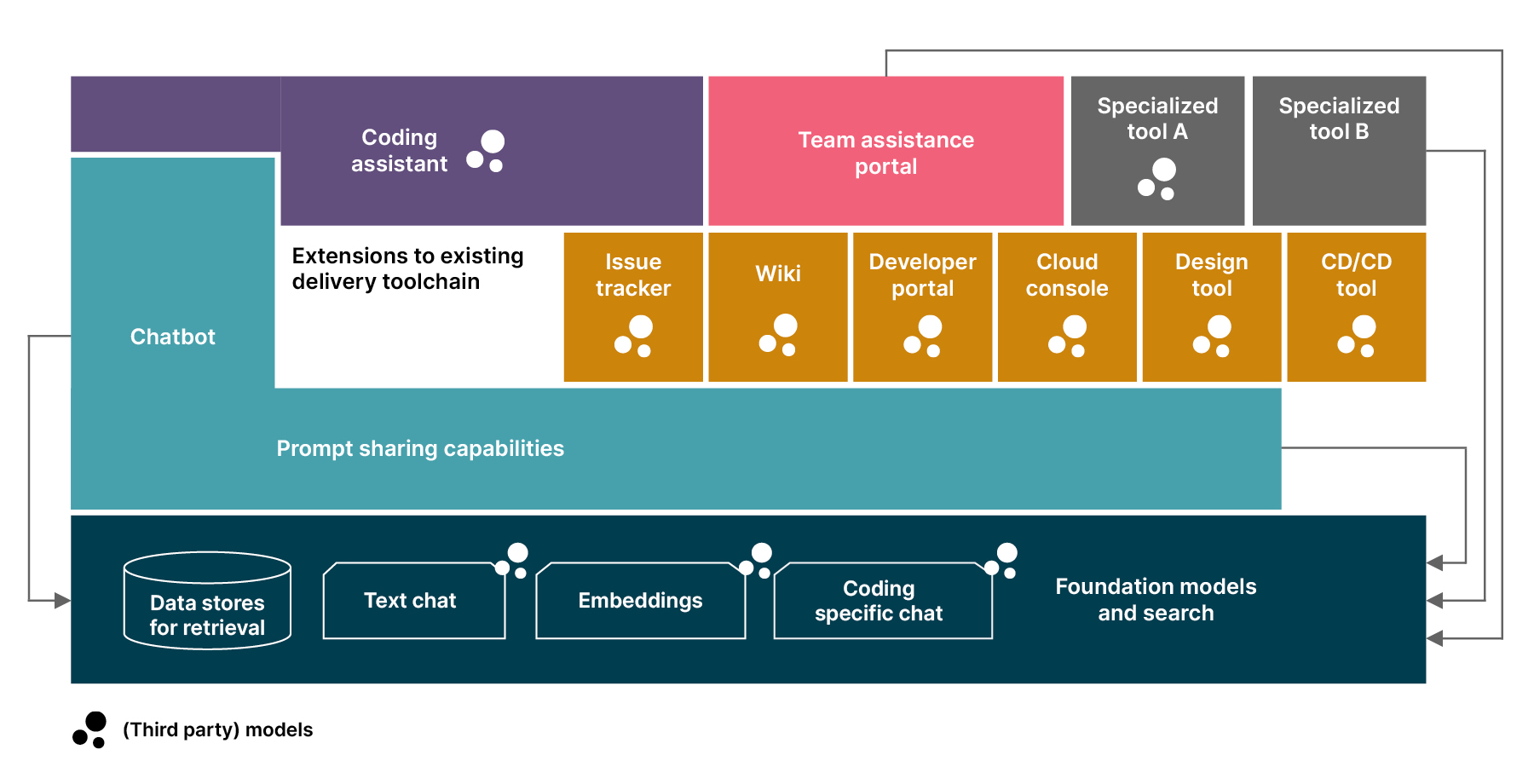

The AI-enabled software engineering stack

Based on what we’re seeing in the market, we’ve identified six key archetypes of tools that together make up an organization’s tooling options for AI-enabled software engineering.

1) Extensions to the existing delivery toolchain

With surging demand for AI, all of the major software vendors are adding AI-powered extensions to their existing development tools. Examples include Figma, Atlassian’s Jira and Confluence, and the big cloud service providers’ cloud consoles (Amazon Q Developer, Microsoft Copilot for Azure, Gemini for Google Cloud). So, before you consider implementing new tools, focus on how AI could be integrated into the tools your team is already using. These extensions will be an important part of your AI support as they offer the best integration into your team’s existing workflow and into the data the AI needs.

It’s therefore important to stay close to the vendors of your main tools and keep up with their announcements and roadmaps, because this is a maturing field. Many of these AI-powered extensions are still in the early stages of development: although the area has a lot of potential, the functionality currently available in incumbent products is still limited. Testing is therefore a critical step in adoption; identify a few people on your team to evaluate new features before rolling them out more widely.

2) GenAI-native tools and products

In addition to extensions to the existing delivery toolchain, a multitude of new products and open-source tools with GenAI at the core of their value proposition are emerging. Listing them all would quickly exceed the space available in this article, but the market currently has something for almost every task, from requirements engineering to documentation, UI design and LLM-backed ChatOps. However, few tools address larger chunks of the software delivery workflow; most focus only on particular tasks.

As many of these products are being developed by early-stage startups, careful evaluation is necessary before you share any data with them. It’s also important to look closely at the scope and maturity of their functionality. In the current GenAI gold rush, many vendors rely on impressive but shallow demos with little substance behind them.

Case study: Thoughtworks helped pave the path for a major US financial services company to leverage AI tools for software development. Just one month into the engagement, we delivered a comprehensive Market Scan report with a configurable roadmap for GenAI initiatives, opening doors to exciting new possibilities.

3) Coding assistants

Among GenAI-native tools, coding assistants stand out as the most established area with the highest potential when looking at AI for software delivery; that’s why they’ve been given their own category in our model. Many of our large enterprise clients are already using AI to assist with coding, and, depending on the context, that usage can lead to up to a 10% reduction in story cycle time.

Currently, the most popular product in the space is GitHub Copilot. The recently released JetBrains AI Assistant is one to watch, as JetBrains is a leader in providing an excellent developer experience in integrated development environments (IDEs). Alternatives like Tabnine and Codeium stand out for their self-hosting options and for training data that alleviates some of the copyleft concerns. AWS and Google have released AWS CodeWhisperer and Gemini Code Assist, which are expected to be particularly good at writing code for their respective cloud stacks. Continue is emerging as one of the more popular open-source IDE extensions for coding assistance, with the option to plug in a range of different model offerings, including ones that run on a developer’s machine. And aider is an open-source coding assistant that works outside the IDE; it’s currently one of the few tools of its kind that can change code across multiple files.

There are also tools specializing in particular areas of coding assistance. Codium AI, for example, is a product that focuses on test generation. In addition, there’s a whole area of coding assistance tools that focus on navigating and explaining code across a codebase or even many codebases, like Sourcegraph’s Cody, Bloop AI or Driver AI.

Coding assistance with AI is here to stay. It already delivers value today, and it has the potential for even greater impact, particularly where GenAI is combined with other technologies that can understand and statically analyze code. However, coding assistants should be paired with effective engineering practices that act as guardrails. While large language models (LLMs) excel at pattern recognition and synthesis, they lack the ability to judge code quality; they mostly amplify whatever they see, indiscriminately. And what they see includes plenty of half-baked public code in their training data, as well as the bad code in our own codebases that we do not want to repeat.

4) Chatbots and prompt sharing capabilities

Let’s face it, your employees are probably already leveraging tools like ChatGPT for their day-to-day work — so the question isn’t if, but how they’re using them. One way to facilitate responsible use is with internal chatbots under your control that allow your teams to tap into the full breadth of what LLMs can do, with built-in guardrails. We’re seeing a range of organizations pilot such internal chatbots at the moment, mostly with custom-built user interfaces (UIs) that either talk to foundation models at the big cloud providers in a monitored way, or even to models hosted by the organization itself.

Unlocking the full potential of these deployments hinges on two factors: upskilling employees in prompting techniques, and giving them the tools to share effective prompts with each other. Platforms like Dify demonstrate what prompt sharing can look like.

Making it easy for people to share prompts is particularly important when using AI to assist software delivery, because software delivery teams share a common set of prompting tasks: every team needs to write user stories, craft testing scenarios, make architecture decisions and apply security practices like threat modeling.
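As a rough illustration of what shared prompts can look like, the following sketch keeps a small library of named prompt templates that any team can fill in. It rests on several assumptions: the library is a plain Python dictionary, and the names `PROMPT_LIBRARY` and `render_prompt`, along with the template wording, are hypothetical. It does not reflect Dify's data model or any other product's API.

```python
from string import Template

# Hypothetical team-wide prompt library. In practice it might live in a
# version-controlled repository or in a prompt-sharing platform.
PROMPT_LIBRARY: dict[str, Template] = {
    "user_story": Template(
        "Write a user story for the feature '$feature' in the form "
        "'As a <role>, I want <goal>, so that <benefit>', "
        "with acceptance criteria covering: $edge_cases"
    ),
    "threat_model": Template(
        "Using the STRIDE categories, list potential threats to the "
        "component '$component', which $description."
    ),
}


def render_prompt(name: str, **params: str) -> str:
    """Fill in a shared prompt template by name."""
    # substitute() raises KeyError if a placeholder has no value, so a
    # half-filled prompt is never sent to the model by accident.
    return PROMPT_LIBRARY[name].substitute(**params)


print(render_prompt("user_story", feature="password reset",
                    edge_cases="expired links and repeated requests"))
```

The code uses `Template.substitute` rather than `safe_substitute` on purpose: a missing parameter raises a `KeyError` instead of silently producing an incomplete prompt.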

Case study: A leading media and publishing company turned to Thoughtworks to optimize their software development process with AI. Using the Thoughtworks Team Assistance Portal, we are conducting experiments across the development lifecycle using AI.

5) Team assistance portals

To recap: many products and tools for software delivery tasks are still in their nascent stages; the incumbent vendors of existing delivery toolchain components are only slowly catching up. At the same time, many are eager to explore the potential of AI in software delivery. In order to learn sooner rather than later, consider deploying a lightweight custom tool that is under your control, and one that your engineering teams can use to learn about AI’s potential.

Experimenting early can inform your planning and investments in the space, and help you monitor the market’s evolution more intentionally. The cost of learning with a custom tool is also relatively low: GenAI as a technology is quite accessible, especially for experimentation. And unlike many other tools used to deliver and run software, GenAI assistance does not lead to vendor lock-in or migration efforts; you can simply stop using one tool and start using another. Sometimes you can even reuse prompts across tools, because the interface of all of them is natural language.

Thoughtworks has an accelerator that can help you set up a team assistance pilot. It codifies good practices and organizational knowledge to assist teams with tasks like product ideation, user story writing, architecture discussions, test data generation or, indeed, anything else suitable for GenAI support. This setup allows you to learn with a tool that is deployed in your environment, under your control, with models provided by a cloud provider you already trust.

6) Foundation models and search

The vendors of delivery toolchain components and GenAI-native products usually provide access to a large language model and search functionality as part of their offering. However, for internal chatbots, open-source tools or custom-built tools like the team assistance portal, organizations need to provide access to (foundation) models and search capabilities for retrieval-augmented generation (RAG) themselves. That is the final component in our mental model.
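To show what retrieval-augmented generation involves, here is a minimal sketch of the retrieval and prompt-assembly steps. It makes a few assumptions: a tiny in-memory set of hypothetical internal documents, and ranking by simple word overlap. Real setups would typically use embedding-based search from a cloud provider or a vector database, and would send the final prompt to whichever foundation model you have access to. The function names and documents are illustrative only.

```python
import re
from collections import Counter

# Hypothetical internal documents; in a real setup these would come from
# wikis, architecture decision records, runbooks, etc., indexed by a search service.
DOCS = {
    "adr-012": "We use PostgreSQL as the primary database for the orders service.",
    "runbook-payments": "Restart the payments service with the deploy pipeline, never manually.",
    "style-guide": "All public APIs must be documented with OpenAPI.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query_tokens: list[str], doc_tokens: list[str]) -> int:
    """Count shared words (a crude stand-in for embedding similarity)."""
    return sum((Counter(query_tokens) & Counter(doc_tokens)).values())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (name, text) pairs with the highest overlap, skipping zero scores."""
    query_tokens = tokenize(query)
    scored = [(overlap_score(query_tokens, tokenize(text)), name, text)
              for name, text in documents.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, text) for score, name, text in scored[:k] if score > 0]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Augment the user's question with the retrieved context."""
    context = "\n".join(f"[{name}] {text}" for name, text in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


print(build_prompt("Which database does the orders service use?", DOCS))
```

The instruction to answer only from the retrieved context, together with skipping documents that have no overlap, is meant to ground the answer in organizational data rather than in the model's general training data.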

Looking at this component through the lens of assisting your software delivery teams today, the default option to consider is the model services provided by your current cloud provider. AWS, Azure and Google Cloud all provide capabilities for simple information retrieval setups that can enhance your tooling with RAG over relevant organizational data. Setting up model access yourself also gives you the opportunity to introduce guardrails, monitoring and analytics.
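Such guardrails, monitoring and analytics can sit in a thin layer of your own that all model access passes through. The following sketch wraps any model client, passed in as a plain function. Before a prompt leaves your environment, it redacts a couple of sensitive patterns, and it records basic usage data per team. The redaction rules, the `GuardedModel` class and the `echo_model` stand-in are hypothetical. They are not part of any cloud provider's SDK, and a production policy would need far broader coverage.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Callable

# Illustrative redaction rules only; a real policy would need far broader coverage.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


@dataclass
class GuardedModel:
    """Hypothetical wrapper that adds redaction and usage logging around a model call."""

    model: Callable[[str], str]  # any function that sends a prompt and returns text
    usage_log: list[dict] = field(default_factory=list)

    @staticmethod
    def redact(prompt: str) -> str:
        for label, pattern in REDACTION_PATTERNS.items():
            prompt = pattern.sub(f"[REDACTED_{label}]", prompt)
        return prompt

    def __call__(self, team: str, prompt: str) -> str:
        safe_prompt = self.redact(prompt)
        start = time.perf_counter()
        response = self.model(safe_prompt)
        # Record basic analytics: who uses the model, how much, and whether redaction fired.
        self.usage_log.append({
            "team": team,
            "prompt_chars": len(safe_prompt),
            "redacted": safe_prompt != prompt,
            "latency_s": time.perf_counter() - start,
        })
        return response


def echo_model(prompt: str) -> str:
    """Stand-in for a real model client, used here only for demonstration."""
    return f"echo: {prompt}"


guarded = GuardedModel(model=echo_model)
print(guarded("payments-team", "Why does jane.doe@example.com see error 500?"))
print(guarded.usage_log)
```

Because the model is passed in as a plain function, the wrapper does not depend on any particular provider. Switching models therefore leaves the guardrails and the usage data in place.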

Looking ahead

The relentless pressure to do more with less — or even the same — isn’t easing up. While many of these tools are still in their early stages, the trajectory of the market suggests rapid development and shifts in software delivery practices and tools. To stay ahead of the curve, organizations need to start familiarizing themselves with AI for software delivery.

Starting small, within selected departments or teams, enables a gradual yet manageable transformation and avoids the challenges of a hasty, large-scale overhaul later on. Beyond the hype, AI assistance in software delivery is not a passing trend. By proactively integrating AI tools and cultivating a culture of responsible use, leaders can keep their organizations competitive and well prepared for a future in which AI is deeply embedded in every aspect of software development.

If you’d like to explore your organization’s potential and readiness to adopt AI across your entire software delivery lifecycle, request our AI-enabled software engineering workshop today.