GDPR: Dude, where’s my data?

By

Published: September 22, 2017

In the first article of this series, we looked at the background the General Data Protection Regulations (GDPR), as well as some its implications. In this follow-up, we’ll concentrate on how to understand all the data in your possession and what that means for your organization. While the deeper question of actual compliance remains an issue for organizations to solve internally, what we’d like to do here is explore ideas that provide a framework for data compliance in various guises.

Picture this scene: A company has a variety of systems, some built from the ground up and changed over the years, some off-the-shelf products that have been integrated with bespoke systems—and in varying states of decay—and, of course, some fully outsourced. Sounding familiar yet?

It’s little wonder that when new compliance regulations come along, with their varying data requirements, the task of getting up to speed can feel akin to moving the office headquarters an inch to the left, brick by brick.

It’s tempting to begin by thinking of constructing an inventory of systems, data flow, permissions as well as geographical information that might be needed. But such a static view of data isn’t sufficient: you need to consider the end-to-end flow of your data. We would like to introduce an iterative approach to work back and forth through your systems to gain understanding in how your systems have evolved over time and pinpoint all the details required to contribute to a GDPR audit. This will bring your systems to life, enabling you to see real functional uses of those systems, which are more useful than static views when mapping data. Only then can we answer the basic questions above.

Let’s consider a retail banking loan application system. There’s a lot of user data to think about multiple back-end systems, asynchronous workflows, manual interventions and so on. Start by considering all the steps required to go from one end, in this case, a customer enquiry, to the other—a loan approval decision. We can create a simplified system representation such as:

Diagrams can help you visualize this. Start with a physical model: get a room and map user journeys through the systems and identify the data items, filtration, validation, storage, and format, at and between each stage. Then step by step, we can start to uncover some mandatory details that GDPR requires: validate ownership, accountability, access and location rules, and constraints. As scenarios are added, a series of electronic diagrams will be required but the clarity obtained by a ‘working wall’ cannot be underestimated.

However, data alone should not really be viewed separately from behavior. If we did that we would just create dry information flows that might miss out areas of the systems we need to audit. Step back and view this from a holistic perspective look at your scenarios: do they represent your business’ products and services, the background tasks and so on?

Privacy impact assessment (PIA) is a process which helps an organization to identify and reduce the privacy risks of a project. An effective PIA will be used throughout the development and implementation of a project, using existing project management processes.

A PIA enables an organization to systematically and thoroughly analyze how a particular project or system will affect the privacy of the individuals involved.

Indeed, under the GDPR, a DPIA (Data Protection Impact Assessment) is legally required. Which is in fact just a PIA.

The key aspects of the DPIA get us closer to answer the simple ‘back to basics’ questions we posed at the start is this article. A DPIA is designed to help us:

So to bring this all together — just where is my data? We’ve suggested a series of context-driven mapping exercises and impact assessments, useful tools no doubt, but they can be put together in a systematic iterative lean model. Let’s travel through your system to and from every endpoint, whether it’s a user screen, batch input, API endpoint and so on.

One common failure with IT systems is known as configuration drift, where small changes, near-term decision-making, and fire-fighting often result in the system irrevocably moving from its last steady state. The same is true for compliance checks. So it’s important to use this approach on an ongoing basis to make subsequent audits easier to take in your stride.

For the greatest success, this model needs to be approached with a transformation mindset. Investigating all the flows, maps and using impact assessments will give you a deeper understanding of your systems; it will also make it obvious where improvements can be made. By asking ‘Dude, where’s my data?’ you might just alight on the perfect opportunity to schedule iterative improvement overall.

Most company leaders realize that transformation is imperative to survival. Yet, many we speak to fail to grasp that transformation itself is unending, change is constant. Testing and learning are the only way to navigate the fast pace of technological and business change. Folks that create plans that describe a desired end state and a series of stages to reach that will find that this is the IT equivalent to the crock of gold at the end of the rainbow. The closer you get the further it moves away.

Plan for change. Not destinations.

Picture this scene: A company has a variety of systems, some built from the ground up and changed over the years, some off-the-shelf products that have been integrated with bespoke systems—and in varying states of decay—and, of course, some fully outsourced. Sounding familiar yet?

It’s little wonder that when new compliance regulations come along, with their varying data requirements, the task of getting up to speed can feel akin to moving the office headquarters an inch to the left, brick by brick.

Getting back to basics

It feels like understanding the scope and scale of the problem is a good place to begin; so let’s start with some seemingly simple questions and then discuss the ways in which we can construct a testing toolkit to help us answer them:- What data do we have?

- Where is it?

- Who accesses it?

- Why do they access it?

It’s tempting to begin by thinking of constructing an inventory of systems, data flow, permissions as well as geographical information that might be needed. But such a static view of data isn’t sufficient: you need to consider the end-to-end flow of your data. We would like to introduce an iterative approach to work back and forth through your systems to gain understanding in how your systems have evolved over time and pinpoint all the details required to contribute to a GDPR audit. This will bring your systems to life, enabling you to see real functional uses of those systems, which are more useful than static views when mapping data. Only then can we answer the basic questions above.

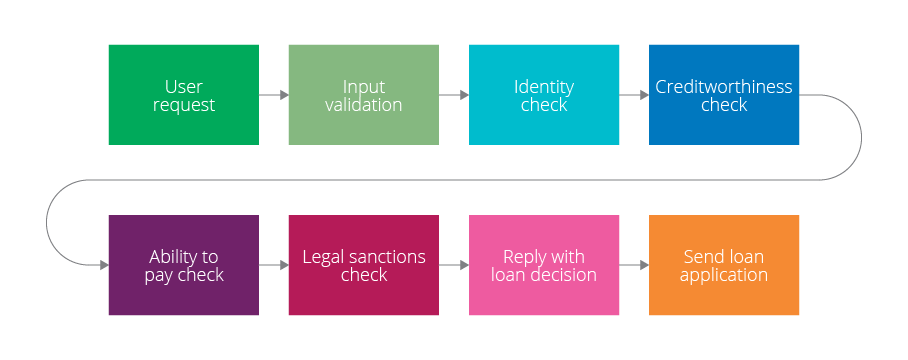

To know where you are going, you need a map

So what is data mapping? By simply tracking every data through the interconnected morass of your systems—both manual and electronic—you might be in a position to answer the questions we listed above. But this approach risks ignoring context. We think it’s better to identify a series of customer scenarios which can be used to construct an information map, enabling you to trace data flows throughout your systems.Let’s consider a retail banking loan application system. There’s a lot of user data to think about multiple back-end systems, asynchronous workflows, manual interventions and so on. Start by considering all the steps required to go from one end, in this case, a customer enquiry, to the other—a loan approval decision. We can create a simplified system representation such as:

Figure 1: A typical loan-approval process

It is important to model the behavior of the system and not just check data in motion or at rest. This ensures we identify every flow in all aspects of the systems we are checking.Diagrams can help you visualize this. Start with a physical model: get a room and map user journeys through the systems and identify the data items, filtration, validation, storage, and format, at and between each stage. Then step by step, we can start to uncover some mandatory details that GDPR requires: validate ownership, accountability, access and location rules, and constraints. As scenarios are added, a series of electronic diagrams will be required but the clarity obtained by a ‘working wall’ cannot be underestimated.

However, data alone should not really be viewed separately from behavior. If we did that we would just create dry information flows that might miss out areas of the systems we need to audit. Step back and view this from a holistic perspective look at your scenarios: do they represent your business’ products and services, the background tasks and so on?

From mappings to impact assessments

The flow above leads us nicely to a tool that data controllers are encouraged to produce: Privacy Impact Assessments or PIAs. In the words of the of the Information Commissioner’s Office (ICO):Privacy impact assessment (PIA) is a process which helps an organization to identify and reduce the privacy risks of a project. An effective PIA will be used throughout the development and implementation of a project, using existing project management processes.

A PIA enables an organization to systematically and thoroughly analyze how a particular project or system will affect the privacy of the individuals involved.

Indeed, under the GDPR, a DPIA (Data Protection Impact Assessment) is legally required. Which is in fact just a PIA.

The key aspects of the DPIA get us closer to answer the simple ‘back to basics’ questions we posed at the start is this article. A DPIA is designed to help us:

- Identify the types of personal information that will be collected

- Specify how it is collected, used, transmitted and stored

- How and why it can be shared and to whom

- How it is protected from inappropriate disclosure at stage of use

A three-staged approach

So to bring this all together — just where is my data? We’ve suggested a series of context-driven mapping exercises and impact assessments, useful tools no doubt, but they can be put together in a systematic iterative lean model. Let’s travel through your system to and from every endpoint, whether it’s a user screen, batch input, API endpoint and so on.

- Create representative end-to-end behavior flow for a journey

- Create information maps that show the data ownership, accountability, access, location, storage and transport requirements

- Conduct a PIA for every step of the journey

One common failure with IT systems is known as configuration drift, where small changes, near-term decision-making, and fire-fighting often result in the system irrevocably moving from its last steady state. The same is true for compliance checks. So it’s important to use this approach on an ongoing basis to make subsequent audits easier to take in your stride.

For the greatest success, this model needs to be approached with a transformation mindset. Investigating all the flows, maps and using impact assessments will give you a deeper understanding of your systems; it will also make it obvious where improvements can be made. By asking ‘Dude, where’s my data?’ you might just alight on the perfect opportunity to schedule iterative improvement overall.

Most company leaders realize that transformation is imperative to survival. Yet, many we speak to fail to grasp that transformation itself is unending, change is constant. Testing and learning are the only way to navigate the fast pace of technological and business change. Folks that create plans that describe a desired end state and a series of stages to reach that will find that this is the IT equivalent to the crock of gold at the end of the rainbow. The closer you get the further it moves away.

Plan for change. Not destinations.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.