Generative AI is all the hype these days. Within seconds, you can have a question answered, generate a realistic picture or write a dissertation. Software engineering is also being influenced by these developments. With tools such as ChatGPT or GitHub Copilot, you can generate code, data and tests. But how useful is the generated code? Can it be shipped to production? Are the tests actually testing something? Is the data valid? These questions are on the minds of many people in the industry.

GitHub Copilot is one of the GenAI-powered tools that show a lot of potential. It is developed by GitHub, under Microsoft's umbrella, and powered by OpenAI Codex.

In this series of articles, I will give my impressions of Copilot, focusing on the practical side of things. For now, I will set aside the legal implications, the impact on younger developers and other controversial topics. My goal is to see how helpful a tool it can become and how to use it to its full potential. I will also leave out Copilot Chat, for the simple reason that it had not been publicly released when I ran these experiments (August 2023). The goal is to build an understanding of the value (or lack thereof) of Copilot and to share my experience with my fellow developers.

I will describe my first time playing with Copilot through three use cases, each time explaining the context, the objective of the experiment and the conclusions I drew from it. The language of choice will be Go, as I had recently been working with it and feel quite comfortable with it. I will use VSCode and the official Copilot plugin provided by GitHub. VSCode is also configured to automatically format the code and fix the imports on save. Though Copilot could be used for the latter, it is simply easier to let the IDE do this.

In my experiments, I will use variations of zero-shot prompting and chain-of-thought prompting. For example, I can write a comment asking Copilot to generate a function (zero-shot), or write a comment within a function asking it to generate the next code block (chain-of-thought).
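The following Go sketch makes the distinction concrete. The `Movie` type and the `titlesBefore` function are hypothetical and exist only for illustration. The comment above the function plays the role of a zero-shot prompt, and the step comments inside it are chain-of-thought prompts. The body stands in for the kind of code an assistant might suggest; it is not actual Copilot output.

```go
package main

import (
	"fmt"
	"sort"
)

// Movie is a hypothetical type used only for this illustration.
type Movie struct {
	Title string
	Year  int
}

// Zero-shot style: one comment above the function describes the whole task.
// titlesBefore returns the titles of the movies released before the given year,
// sorted alphabetically.
func titlesBefore(movies []Movie, year int) []string {
	// Chain-of-thought style: one comment per step, each prompting the next block.

	// Step 1: collect the titles of movies released before the given year.
	titles := []string{}
	for _, m := range movies {
		if m.Year < year {
			titles = append(titles, m.Title)
		}
	}

	// Step 2: sort the titles alphabetically.
	sort.Strings(titles)
	return titles
}

func main() {
	movies := []Movie{{"Heat", 1995}, {"Alien", 1979}, {"Dune", 2021}}
	fmt.Println(titlesBefore(movies, 2000)) // [Alien Heat]
}
```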

Throughout my experiments, I did some background research; as GenAI was new to me, I felt it was more than necessary. The Visual Studio Code YouTube channel gave me some pointers on how to start. A beginner's guide to prompt engineering gave me some context and helped me clarify the terminology. How to use Copilot showed me what to expect in the IDE and gave some good tips.

Experiment one: Let’s give it a try!

I started a new toy project: a simple web application to retrieve information about movies and actors. One of the strengths of Go is that you do not need a complex framework to build a web app, unlike in other languages (I’m looking at you, Java and JavaScript!). However, to keep things interesting, I decided to use a couple of libraries.

For this small project, I knew exactly what I wanted. I could have written everything myself with a minimal amount of Googling, just to refresh my memory of the exact syntax. The point of this experiment was to see how Copilot works and to experiment with prompting.

Requirements:

Command line application using Cobra

Web server using Echo

In-memory database, JSON file loaded into Go structs

Four endpoints to get movies, actors, actors from a movie and movies from an actor

IMDB numbers must be valid
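To give a rough idea of what the in-memory database requirement involves, the sketch below decodes a JSON file into Go structs using the standard `encoding/json` package. The type names, the JSON field names and the `data.json` file name are assumptions made for illustration. The project's actual data layout is not shown in the article.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// Actor and Movie are hypothetical shapes for the toy project's data.
type Actor struct {
	ID   string `json:"id"` // IMDB id, e.g. "nm1234567"
	Name string `json:"name"`
}

type Movie struct {
	ID       string   `json:"id"` // IMDB id, e.g. "tt1234567"
	Title    string   `json:"title"`
	ActorIDs []string `json:"actorIds"` // ids of the actors appearing in the movie
}

// Database holds the whole data set in memory.
type Database struct {
	Actors []Actor `json:"actors"`
	Movies []Movie `json:"movies"`
}

// parseDatabase decodes raw JSON into a Database.
func parseDatabase(data []byte) (*Database, error) {
	var db Database
	if err := json.Unmarshal(data, &db); err != nil {
		return nil, fmt.Errorf("decoding database: %w", err)
	}
	return &db, nil
}

// loadDatabase reads the JSON file at path and decodes it.
func loadDatabase(path string) (*Database, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return nil, fmt.Errorf("reading %s: %w", path, err)
	}
	return parseDatabase(data)
}

func main() {
	db, err := loadDatabase("data.json") // hypothetical file name
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("loaded %d actors and %d movies\n", len(db.Actors), len(db.Movies))
}
```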

As this was my first time ever playing with Copilot and GenAI programming, I decided to go with the flow: I started typing code or comments and observed what was suggested. I then either accepted the whole suggestion with the Tab key or started again.

Starting up the server

I used a comment to explain that I wanted to start a server with the Echo library. After I typed the beginning of the function signature, Copilot suggested the correct code, as illustrated in the following image (the suggested code is in gray). I wasn’t interested in the default endpoint, so I removed it.

From there, it was easy to add the usual middlewares. I started typing the code to do so, and after just three characters Copilot suggested the correct code. From then on, Copilot correctly suggested each following line as soon as I started a new one. This is illustrated in the following two images.

Adding a health check endpoint worked the same way. Adding the other endpoints later was just as automatic: Copilot identified the handler functions and inferred the correct endpoint names from the handlers’ names.

The same approach (one simple comment, then start typing) also worked for setting up the command line application with Cobra.

Implementing the business logic

Writing the code to access the in-memory database and implement the various endpoints followed the same pattern. I used a top-level comment to give details (e.g. “get the actor with the given id”), then started typing code. Copilot first generated a one-line function returning a default value. I typed the first characters of a for-loop and Copilot generated the code to find the actor in the list. Perfect! Error handling was missing, so I added it, again letting Copilot suggest code after I typed the first characters. I then started typing the function signature to get the movie with a given id, and Copilot correctly suggested the whole function in one go.

Generating data

Data generation was a weakness in this experiment. At first, generating a list of actors with valid IMDB numbers went okay. However, the generated ids often did not match the actual IMDB number of the actor, and I had to double-check on imdb.com to correct them (yes, I am that pedantic!). This became more of a problem when generating test data. Copilot generated data that looked correct but did not match the hard-coded data (i.e. non-existent ids or names). It always looked fine, but it always required fixing. The problem persisted when generating a JSON file holding the same data: Copilot was not able to suggest the correct data, despite the Go file with the hard-coded values being open. Again, it needed manual editing, one line at a time.
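Some of these mismatches can at least be caught mechanically. The experiment does not describe this check. It is a sketch with hypothetical types that reports movie entries referencing actor ids missing from the actor list. It only detects internal inconsistencies. Whether an id is the actor's real IMDB number still has to be checked on imdb.com.

```go
package main

import "fmt"

// Actor and Movie are hypothetical record types.
type Actor struct{ ID, Name string }
type Movie struct {
	ID       string
	Title    string
	ActorIDs []string
}

// danglingActorIDs lists every reference from a movie to an actor id
// that does not exist in the actor list.
func danglingActorIDs(actors []Actor, movies []Movie) []string {
	known := make(map[string]bool, len(actors))
	for _, a := range actors {
		known[a.ID] = true
	}
	var problems []string
	for _, m := range movies {
		for _, id := range m.ActorIDs {
			if !known[id] {
				problems = append(problems, fmt.Sprintf("%s references unknown actor %s", m.ID, id))
			}
		}
	}
	return problems
}

func main() {
	actors := []Actor{{ID: "nm1234567", Name: "Jane Doe"}}
	movies := []Movie{{ID: "tt7654321", Title: "Example", ActorIDs: []string{"nm1234567", "nm9999999"}}}
	for _, p := range danglingActorIDs(actors, movies) {
		fmt.Println(p) // tt7654321 references unknown actor nm9999999
	}
}
```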

Unit testing the code

On the subject of unit testing, Copilot was good at generating test code. In this experiment I took a test-last approach, and Copilot had no problem generating tests for existing functions. After I named the test and let the IDE complete the function signature, I started typing the code for a list of test cases. Copilot often suggested the correct fields, populated them with what appeared to be correct data (cf. previous point) and generated sub-tests, as is idiomatic in Go. The first test in the project was generated with default error checking, which is quite verbose; I prefer using assertions from the testify library. Stating this in a comment, or adding the import myself, was enough to tell Copilot what I wanted: I deleted the code, waited for Copilot’s suggestion, and it generated what I expected.

Conclusion

This first experiment was quite interesting! There was a lot of trial and error. It was captivating to see what Copilot would suggest as I was typing code.

In a new project or feature there is no context (that is to say, there is no existing code or comments to guide the prompt), so code generation is minimal. For example, when I defined a function, Copilot often suggested a one-line implementation returning default values. It only started making sensible suggestions once I started typing code. With context from comments (e.g. “use this library”), it was able to suggest meaningful code.

Where Copilot really shone was in generating a function similar to an existing one. For example, writing the function to get a movie from the database only required typing the function name: the code is almost identical to the function that gets an actor, and Copilot could infer the difference from the function name itself. This is where the real speed-up occurs, and the same applies to test code. Copilot is also great at generating boilerplate code (e.g. instantiating a simple server, filtering a list, adding an endpoint).

In the end, reviewing the generated code is what takes the most time. This can become tedious and lead to fatigue. You have to understand what you want well enough to critically evaluate the suggestions. For example, when generating the web server, Copilot added a default endpoint. Had I not removed it, it would have been superfluous but acceptable in this toy project; in production code, it could have led to a security issue.

Key take-aways:

Going with the flow was quite enjoyable: Seeing suggestions as I was typing and accepting/discarding the whole lot works well to generate boilerplate code.

Use top level comments to give context: This is particularly useful when starting a new file.

Choose your next word carefully: Naming variables and functions does contribute to the context.

Experiment two: Trying to be smart

This experiment revisits the first one, with a twist! I now have a better understanding of how Copilot works, both from my own experiments and from online research. The challenge here is to have Copilot generate as much correct code as possible in one go: for example, generating an entire function correctly, without having to edit the code or prompt further inside the function. The goal of this experiment is to try to outsmart Copilot.

To do this, I began looking beyond the first suggestion. The VSCode plugin offers two ways to do so: using the mouse to select another suggestion, or opening the Copilot UI to see up to 10 suggestions.

Requirements:

Same as experiment one

Typing as little code as possible (prompt efficiently)

Test coverage should be close to 100%

For this experiment I created a dedicated repository. I kept the comments used for prompting and context, to illustrate how I communicated with Copilot.

Starting up the server

I used an iterative approach to generate the whole function in one go. I started with a simple comment (the first line in the example), which produced incomplete code, very similar to experiment one. I then mentioned middlewares, which were then added to the suggestion. The endpoint took a few iterations to reach the desired outcome: the first suggestions called a non-existent function, whereas I wanted an inline closure. Lastly, I asked for the address to be passed as a parameter. The final prompt and suggested answer are illustrated in the following image.

This attempt at generating everything succeeded. The prompt was informative, giving enough direction and context, and the generated code could be accepted as is.

Validate IMDB ids

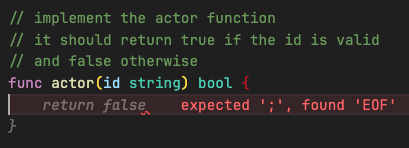

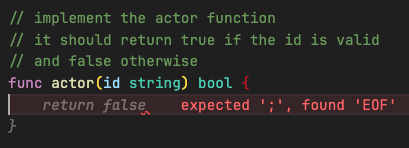

I started by writing the tests. I put a top-level comment stating the purpose of the package and then started typing the function name. Copilot suggested nothing, so I started typing the first character of the test cases. Copilot then helped complete the struct and generate test cases. All cases were meaningful up to a point: across multiple attempts, Copilot sometimes started repeating a given case and sometimes looped back to the first one. This is shown in the following image. Interestingly, the suggested examples were different from those in experiment one, though they still covered the same cases.

Copilot then suggested a correct test statement, which I asked it to rewrite with the testify library, as in experiment one. From there I prompted Copilot for the implementation. Different prompts all resulted in Copilot suggesting a default value. When I typed the first characters, it then correctly suggested a partial implementation, and iterating from there allowed me to generate the entire function.
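For illustration, here is one possible implementation of the actor id validation, together with a table of cases in the spirit of the generated ones. It assumes an actor id is `nm` followed by seven or eight digits and uses a regular expression. The implementation Copilot generated may well have differed, and the name `isValidActorID` is hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// actorIDPattern assumes an actor id is "nm" followed by seven or eight digits.
var actorIDPattern = regexp.MustCompile(`^nm\d{7,8}$`)

// isValidActorID reports whether id looks like an IMDB actor id.
func isValidActorID(id string) bool {
	return actorIDPattern.MatchString(id)
}

func main() {
	// Table of cases, one per path through the validation.
	cases := []struct {
		id   string
		want bool
	}{
		{"nm1234567", true},    // seven digits
		{"nm12345678", true},   // eight digits
		{"nm123456", false},    // too short
		{"nm123456789", false}, // too long
		{"tt1234567", false},   // movie prefix
		{"nmabcdefg", false},   // not digits
		{"", false},            // empty
	}
	for _, c := range cases {
		if got := isValidActorID(c.id); got != c.want {
			fmt.Printf("isValidActorID(%q) = %v, want %v\n", c.id, got, c.want)
		}
	}
}
```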

At this point I noticed a problem with relying too much on Copilot. I ran test coverage and saw that one path was not tested. There are two things to note here. First, we are back to the data issue: Copilot generated many valid test cases, but one was missing and I didn’t notice it. Second, the missing case didn’t prevent Copilot from generating the correct implementation, so no failing test revealed the gap; only the coverage report did. This is where doing TDD properly (i.e. red-green-refactor, one case at a time) would have helped. Trying to get Copilot to do everything in one go tripped me up. Perhaps a Copilot Chat conversation might have helped in this situation.

Moving on, validating a movie id was straightforward. Copilot was able to duplicate the tests and implementation as soon as I started naming the functions. As the implementations for actors and movies were almost 100% identical, I asked Copilot to extract the common code into another function, and it correctly added the prefix parameter.

Refactoring the two functions was as easy as deleting the bodies and waiting for Copilot to suggest the replacement.
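After the refactoring, the shared code might look like this. A single `isValidID` function takes the prefix as a parameter, and thin wrappers handle actors (`nm`) and movies (`tt`). As in the earlier validation sketch, the function names and the seven-or-eight-digit rule are assumptions.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// digitsPattern assumes the part after the prefix is seven or eight digits.
var digitsPattern = regexp.MustCompile(`^\d{7,8}$`)

// isValidID holds the code shared by both validators.
func isValidID(prefix, id string) bool {
	if !strings.HasPrefix(id, prefix) {
		return false
	}
	return digitsPattern.MatchString(strings.TrimPrefix(id, prefix))
}

// isValidActorID and isValidMovieID only differ by their prefix.
func isValidActorID(id string) bool { return isValidID("nm", id) }
func isValidMovieID(id string) bool { return isValidID("tt", id) }

func main() {
	fmt.Println(isValidActorID("nm1234567"), isValidMovieID("nm1234567")) // true false
}
```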

One step further

Before closing the experiment, I wanted to try something new: a function to fetch all actors from the IMDB page of a movie. I had tried this myself before but, having no experience in web scraping, got nowhere. Playing with Copilot in that previous project also led nowhere.

I decided to give it another try in this project, without much hope. I created a new file, wrote a comment explaining what I wanted and mentioned the library to use. Copilot generated a function that looked different from my previous attempts. I ran it and, to my surprise, it worked! It returned a list of actor ids. I went to imdb.com to double-check, and the output was indeed correct.
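The library used above is not named, so this sketch uses only the standard library as a stand-in. It downloads a page with `net/http` and pulls `/name/nm…` links out of the HTML with a regular expression. The approach is hypothetical and fragile. It collects every linked person, including directors and writers, not only the cast. It depends on IMDb's current markup, and IMDb may reject automated requests. The title id in `main` is a placeholder.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"regexp"
)

// actorLinkPattern assumes people are linked as "/name/nm<digits>" in the HTML.
var actorLinkPattern = regexp.MustCompile(`/name/(nm\d+)`)

// extractActorIDs returns the unique ids linked from an HTML page,
// in order of first appearance.
func extractActorIDs(html string) []string {
	seen := make(map[string]bool)
	var ids []string
	for _, m := range actorLinkPattern.FindAllStringSubmatch(html, -1) {
		id := m[1]
		if !seen[id] {
			seen[id] = true
			ids = append(ids, id)
		}
	}
	return ids
}

// fetchActorIDs downloads a page and extracts the ids from it.
func fetchActorIDs(url string) ([]string, error) {
	resp, err := http.Get(url)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return extractActorIDs(string(body)), nil
}

func main() {
	ids, err := fetchActorIDs("https://www.imdb.com/title/tt1234567/fullcredits") // placeholder id
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(ids)
}
```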

This experiment is illustrated in this commit.

Conclusion

Trying to get Copilot to generate entire slices of code is quite fun! It felt very rewarding when I got the expected result. However, it requires a lot of effort: sometimes I typed as many lines of comments as the code to be generated, with a lot of trial and error. Sometimes it simply didn’t work, requiring manual editing at best, or writing the implementation myself at worst.

This approach might work for an exported function, for which you would write the documentation first, but even then I don’t think it would be a silver bullet. It would not apply to unexported functions, and it requires knowing exactly what the function will do before starting. A lot of preconditions!

As mentioned in the previous experiment, reviewing the generated suggestion before being able to make a decision is exhausting. Even more so in this experiment, as the suggestions were much larger (whole functions vs. one block).

Understanding how to prompt and how to communicate with the tool makes a significant difference when trying to achieve this. As I was still learning, I did not yet have this experience.

Key take-aways:

It requires a lot of effort

It is rewarding when it works

More time-consuming than going with the flow

Goes against TDD principles

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.