Modernizing your build pipelines

Published: November 05, 2018

Doing Continuous Integration is a lot easier if you have the right tools. In our project at a German car manufacturer, we were tasked with developing new services and bringing them to the cloud. We had a centralized Jenkins instance, shared by all the teams in the department. It didn’t fit our needs and made it harder for us to deliver software quickly and reliably. In this article we’ll explain how we migrated to a new solution and recreated all of our build pipelines, and explore what we learned from our experience.

The problems with the client’s existing Jenkins instance included:

- It was a snowflake, which meant that understanding its configuration was hard. Making changes or recreating it would have been very time-consuming

- Most of the build pipelines had been written in an internally developed domain-specific language (DSL) for pipelines as code. That made the legacy code difficult to understand and even harder to change

- Maintenance had been sparse and ad hoc, because no team owned the instance

Making a choice

We wanted to make this process as transparent and objective as possible. These were our requirements for a new tool:

- Full configuration through code. Manual changes decrease maintainability. We want to reflect every change in version control

- Pipelines as first class citizens. Build pipelines are a crucial part of our infrastructure, and we want the best possible support from our tool

- Container friendly. We want to make every step in the pipeline reproducible. For that we want to use freshly built Docker containers for all our steps

- Visualization. The status of the build should be easy to check at any time, as pipelines are checked much more often than they are written

- Support. Active community, regular releases

We researched alternatives based on these criteria. We started with tools familiar to the team, such as ConcourseCI, TravisCI, CircleCI, GoCD, and Jenkins 2.0.

All of them offered what we were looking for, but in this particular case, we opted against suggesting GoCD — which is developed by Thoughtworks. We’re actually big fans of GoCD, but when we’re working with new clients, we sometimes choose not to recommend our own products, in case they’re worried about bias.

We spiked some of the tools in the team and wrote down our findings. In the end, we decided to use Concourse. We liked the support for containers, the simplicity of the UI and that the pipeline was fully defined through a YAML file. However, we believe that the lessons we’ve learned can be applied to any modern CI tool.

Building high quality pipelines

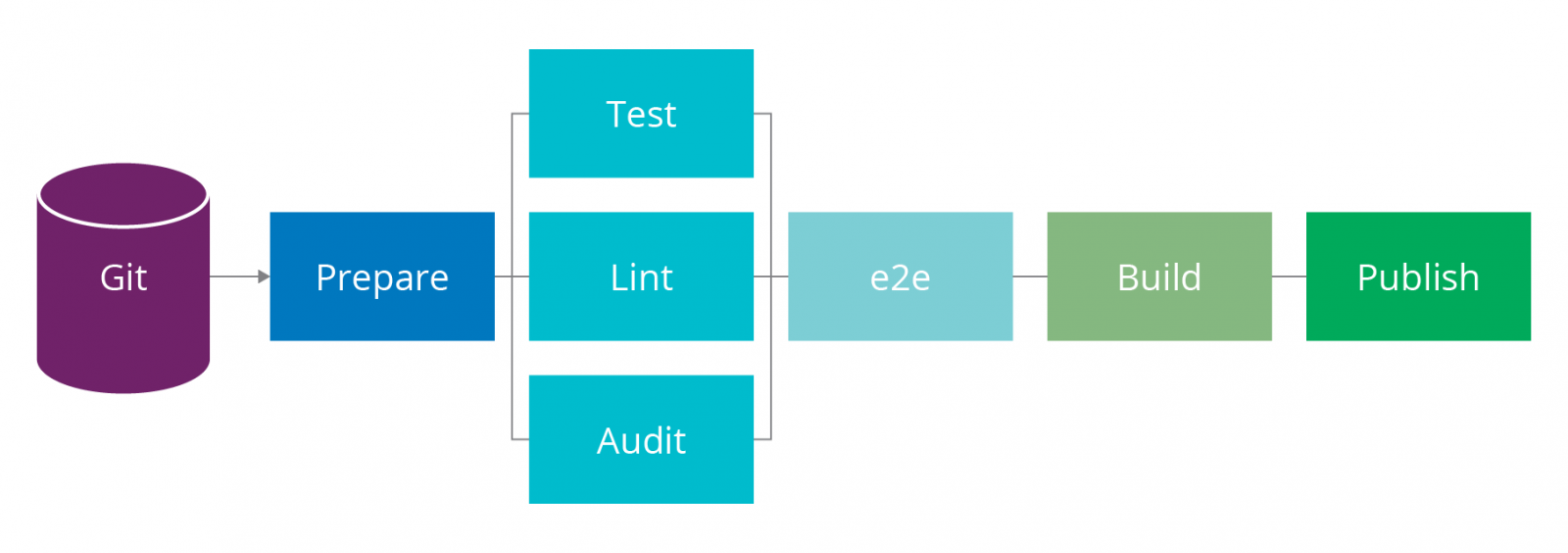

Our pipelines have a bunch of responsibilities. For a frontend application these include:

- Multiple linters, for TypeScript, CSS, Shell Scripts, Terraform and Docker

- Unit tests, both for JavaScript and for Shell Scripts

- Dependency checks with npm audit

- Applying infrastructure changes

- End to end tests

- Building the application and deploying it to a CDN

Figure 1: Building pipelines

That only defines what the pipeline does. But what makes a pipeline more useful than another?

- It’s fast. Quick feedback helps you react faster

- It’s reliable. Having to run a flaky build over and over is extremely frustrating

- It’s maintainable. A pipeline should be maintained without too much effort

- It’s scalable. Usually there are multiple pipelines to maintain

- It’s visual. Dependencies are easy to understand and a failed build can be traced back quickly

Fast pipelines are crucial. You want to encourage your developers to push their code early and often. That can only happen if they can get feedback on their changes quickly.

Parallelization reduces the build time. Ideally, all the tasks that aren’t dependent on each other should run in parallel. The CI should do that transparently for you.

    - aggregate:
      - put: dev-container
        params:
          <<: *docker-params
          build: git
          dockerfile: git/Dockerfile.build
      - put: serverspec-container
        params:
          <<: *docker-params
          build: git/serverspec
          dockerfile: git/serverspec/Dockerfile.serverspec

Figure 2: Building multiple images in parallel

Quick feedback isn’t only achieved with speed. Tasks that fail randomly can kill the feedback loop. This was a problem for us, as we often had to trigger unreliable tasks over and over until they worked.

In our experience, the best way to ensure reliable builds is to use containers to run each task in isolation. Keeping a persistent workspace across builds, as Jenkins does, can save you time but it’s a recipe for flakiness.

You need to create the containers that are used in the pipeline, with the binaries and packages for all the defined steps. We prefer building them as part of the pipeline itself. Even if they’re not production containers, you should still follow best practices to build them. There are plenty of articles available on how to write high-quality images.

    FROM node:10.11-stretch

    # Pin the versions and checksums of the tools installed below.
    # Note: the hadolint value is 40 hex digits and is verified with sha1sum,
    # despite the SHA256 in its variable name.
    ENV CONCOURSE_SHA1='f397d4f516c0bd7e1c854ff6ea6d0b5bf9683750' \
        CONCOURSE_VERSION='3.14.1' \
        HADOLINT_VERSION='v1.10.4' \
        HADOLINT_SHA256='66815d142f0ed9b0ea1120e6d27142283116bf26'

    # Make a RUN step fail if any command in a pipe fails.
    SHELL ["/bin/bash", "-o", "pipefail", "-c"]

    # Install fly and hadolint, verify their checksums, then clean the apt caches.
    RUN apt-get update && \
        apt-get -y install --no-install-recommends sudo curl shellcheck && \
        curl -Lk "https://github.com/concourse/concourse/releases/download/v${CONCOURSE_VERSION}/fly_linux_amd64" -o /usr/bin/fly && \
        echo "${CONCOURSE_SHA1} /usr/bin/fly" | sha1sum -c - && \
        chmod +x /usr/bin/fly && \
        curl -Lk "https://github.com/hadolint/hadolint/releases/download/${HADOLINT_VERSION}/hadolint-Linux-x86_64" -o /usr/bin/hadolint && \
        echo "${HADOLINT_SHA256} /usr/bin/hadolint" | sha1sum -c - && \
        chmod +x /usr/bin/hadolint && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/*

Figure 2: a container to build JavaScript applications

Testing containers

We always use ServerSpec to test our containers following a TDD approach. This applies both to the containers that are only used in the pipeline and to the containers that will run in production.

    require_relative 'spec_helper'

    describe 'dev-container' do
      describe 'node' do
        describe file('/usr/local/bin/node') do
          it { is_expected.to be_executable }
        end

        [
          [:node, /10.4.1/],
          [:npm, /6.1.0/]
        ].each do |executable, version|
          describe command("#{executable} -v") do
            its(:stdout) { is_expected.to match(version) }
          end
        end

        describe command('npm doctor') do
          its(:exit_status) { is_expected.to eq 0 }
        end
      end

      describe 'shell' do
        %i[shellcheck].each do |executable|
          describe file("/usr/bin/#{executable}") do
            it { is_expected.to be_executable }
          end
        end
      end
    end

Figure 3: testing that the development container has the right versions

Getting this to work can be very challenging, as it requires running Docker in Docker. We have an example that you can use if you want to do this in Concourse. Use it with this entry point so that permissions are set properly.

    platform: linux
    inputs:
      - name: git
    run:
      path: bash
      dir: git/serverspec
      args:
        - -c
        - ./entrypoint.sh ./run
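Because the tests start Docker inside the task container, the step that runs this task typically has to be privileged. The following is a minimal sketch of how such a step might look in a pipeline, not a copy of our configuration: the step name and the task file path are assumptions for illustration, and it presumes that `serverspec-container` was fetched or built earlier in the same job.

```yaml
# Hypothetical step; the step name and file path are illustrative.
- task: test-dev-container
  # Running Docker in Docker needs elevated permissions in Concourse.
  privileged: true
  # Assumes a preceding `get` or `put` of serverspec-container in this plan.
  image: serverspec-container
  # Assumed location of the task definition shown above.
  file: git/pipeline/tasks/serverspec.yml
```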

Using containers helps with reliability and reproducibility, but it can hinder the speed of your pipeline because you have to repeat steps like downloading dependencies and running build scripts multiple times. Caching dependencies, such as npm packages, is an acceptable tradeoff.

Low maintenance and scalability

Having the pipelines reflected in code helps to keep the maintenance cost low. Our pipelines mostly call shell scripts that also work locally. This allows us to test the steps before pushing, which allows for a faster feedback loop. In many Thoughtworks projects, it is common to find a go script for this, as explained in more detail in this article.

As your pipelines grow, and as you add new pipelines, complexity grows. Our old pipelines had so much copy-pasted code that changing anything took a lot of effort.

One way to avoid duplication is to parametrize tasks. You can write the definition of a task in a file, and use it from the pipeline. It is configured by passing variables to it. We use this extensively. We have different linters that we want to run as separate tasks, so we built a generic linter task, such as:

    platform: linux
    inputs:
      - name: git
    caches:
      - path: git/node_modules
    run:
      path: sh
      dir: git
      args:
        - -exc
        - |
          npm i
          ./go linter-${TARGET}

Then, we can integrate this into a pipeline like this:

    - task: lint-sh
      image: dev-container
      params:
        <<: *common-params
        TARGET: sh
      file: git/pipeline/tasks/linter.yml

We have a library of tasks to reuse code across pipelines. It works well because of the effort we made in keeping the pipelines consistent. Some tasks, like updating the pipeline, almost never change and can be freely reused.
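To illustrate the reuse, the same task file can back several linter steps that differ only in `TARGET`. The sketch below assumes that linter targets named `css` and `docker` exist in the go script, which is a guess based on the linters listed earlier; only `sh` appears in the example above. It also leaves out the shared `*common-params` for brevity.

```yaml
# Sketch: one generic task definition, several parametrized steps run in parallel.
- aggregate:
    - task: lint-sh
      image: dev-container
      file: git/pipeline/tasks/linter.yml
      params:
        TARGET: sh
    - task: lint-css                        # hypothetical target name
      image: dev-container
      file: git/pipeline/tasks/linter.yml
      params:
        TARGET: css
    - task: lint-docker                     # hypothetical target name
      image: dev-container
      file: git/pipeline/tasks/linter.yml
      params:
        TARGET: docker
```

With this layout, adding a linter means adding one step and one target in the go script rather than writing a new task definition, and each linter still shows up as its own box in the pipeline view.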

We try to avoid over-abstracting, however. Sometimes it’s better to just have two different tasks and reduce the coupling between them.

The same applies to the containers where the tasks are executed. You have to balance between trying to reuse containers and coupling different pipelines through these images. One container that we reuse is the one that runs the ServerSpec tests.

Visualization

One antipattern that we saw in our old pipelines was running multiple checks together in the same step. That forces you to read through multiple logs to find what failed. Instead, we clearly divide the steps, which helps identify errors more quickly.

Consistency also helps with visualization. The effort we spent building shared tasks has made the pipelines more similar to each other.

Lastly, having a dashboard for your pipelines gives the team one place to check everything’s working correctly, and it serves as an information radiator.

How it all fits together

At the start of our journey, we decided to recreate our build pipelines and to switch to a new CI tool, operated by the team.

In order to avoid replacing one snowflake with another, we used Infrastructure as Code from the beginning. Specifically, we use Terraform to provision all the infrastructure located on AWS.

We run Concourse on EC2 instances, using Auto Scaling groups. For the database, we chose Amazon RDS because we did not want to set it up ourselves.

We hypothesized that this investment would help us deliver software faster while spending less time maintaining and debugging pipelines. How did it work in practice?

The good

Being code driven means that our infrastructure is always in a known state. Owning the solution allows us to adapt it to our needs. One such case was replacing PhantomJS, which is no longer actively developed, with Headless Chrome to run frontend tests.

Building everything in isolated containers makes for safe and predictable builds. We know exactly which version of which binary is being used in each step. The containers themselves are also tested as part of the pipeline.

Thanks to our effort in reusability, creating new pipelines is a breeze. Refactorings are easier to apply and also happen more often. The new pipelines are a lot easier to debug as well.

The bad

The thing we liked the least about Concourse is the documentation. It’s pretty thin in many places. Caching, for example, isn’t explained particularly well.

Switching tools takes some getting used to. Jenkins can restart a job from scratch, whereas Concourse needs a new version of a resource to be available. One big drawback of Concourse is that you cannot pass artifacts between jobs, which forces you to persist anything that you want to keep in external storage, such as S3. This makes working with intermediate artifacts quite inconvenient.
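As a rough illustration of that workaround, one job can upload its output through an S3 resource and a later job can fetch it. This is a sketch under assumptions, not our actual pipeline: the resource and job names, the bucket, the file naming scheme, the task files and the `((...))` variable names are all placeholders.

```yaml
resources:
  - name: git
    type: git
    source:
      uri: ((git-uri))                        # placeholder

  - name: app-bundle
    type: s3
    source:
      bucket: ((artifact-bucket))             # placeholder bucket
      region_name: ((aws-region))
      regexp: frontend/app-(.*).tgz           # the capture group is the version
      access_key_id: ((aws-access-key-id))
      secret_access_key: ((aws-secret-access-key))

jobs:
  - name: build
    plan:
      - get: git
        trigger: true
      # Hypothetical task that declares an output named `dist`
      # and writes dist/app-<version>.tgz into it.
      - task: build
        file: git/pipeline/tasks/build.yml
      # Persist the artifact outside Concourse.
      - put: app-bundle
        params:
          file: dist/app-*.tgz

  - name: deploy
    plan:
      - get: git
        passed: [build]
      # Fetch the artifact that the build job uploaded.
      - get: app-bundle
        passed: [build]
        trigger: true
      # Hypothetical task that takes `app-bundle` as an input.
      - task: deploy
        file: git/pipeline/tasks/deploy.yml
```

The `passed: [build]` constraint is what ties the two jobs together: the deploy job only sees versions of the bundle that went through a successful build.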

We like the dashboard quite a lot, particularly now that it’s the default page, starting from Concourse 4. However, we do miss support for CCMenu. Tasks that are triggered manually aren’t easy to visualize in the pipeline, and you cannot see who triggered certain builds.

The ugly

Operating infrastructure requires more effort from the team. Concourse is a lot more complex to set up than Jenkins, which basically only needs a war file. You can see the complexity of the architecture on the official page. We’ve had downtimes related to the CI infrastructure that, while serving as a learning experience, have impacted our productivity.

Upgrading from Concourse 3 to Concourse 4 was more painful than expected. The database schema changed between the two releases. In the end, we spun up a new instance and discarded all the build history. On the other hand, being responsible for our own tool has encouraged us to be more proactive in keeping it up to date.

Conclusion

All in all, the positives greatly outweigh the negatives. We’ve reached a state where creating new pipelines for new services and infrastructure can be done quickly and reliably. We’re a lot happier with the state of our build infrastructure than we were before.

If you want to check more details, we have prepared a repository with some sample code that can be used as a starting point.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.