Data products are increasingly seen as a clear differentiator and competitive advantage in the modern business landscape. However, businesses encounter many challenges and considerable complexity in leveraging and operationalising their data assets. Driven by the scalability and cost-effectiveness of cloud data warehouses and data lakes, the modern data stack is a suite of tools and patterns that have emerged to address these challenges and lower the barrier to data integration.

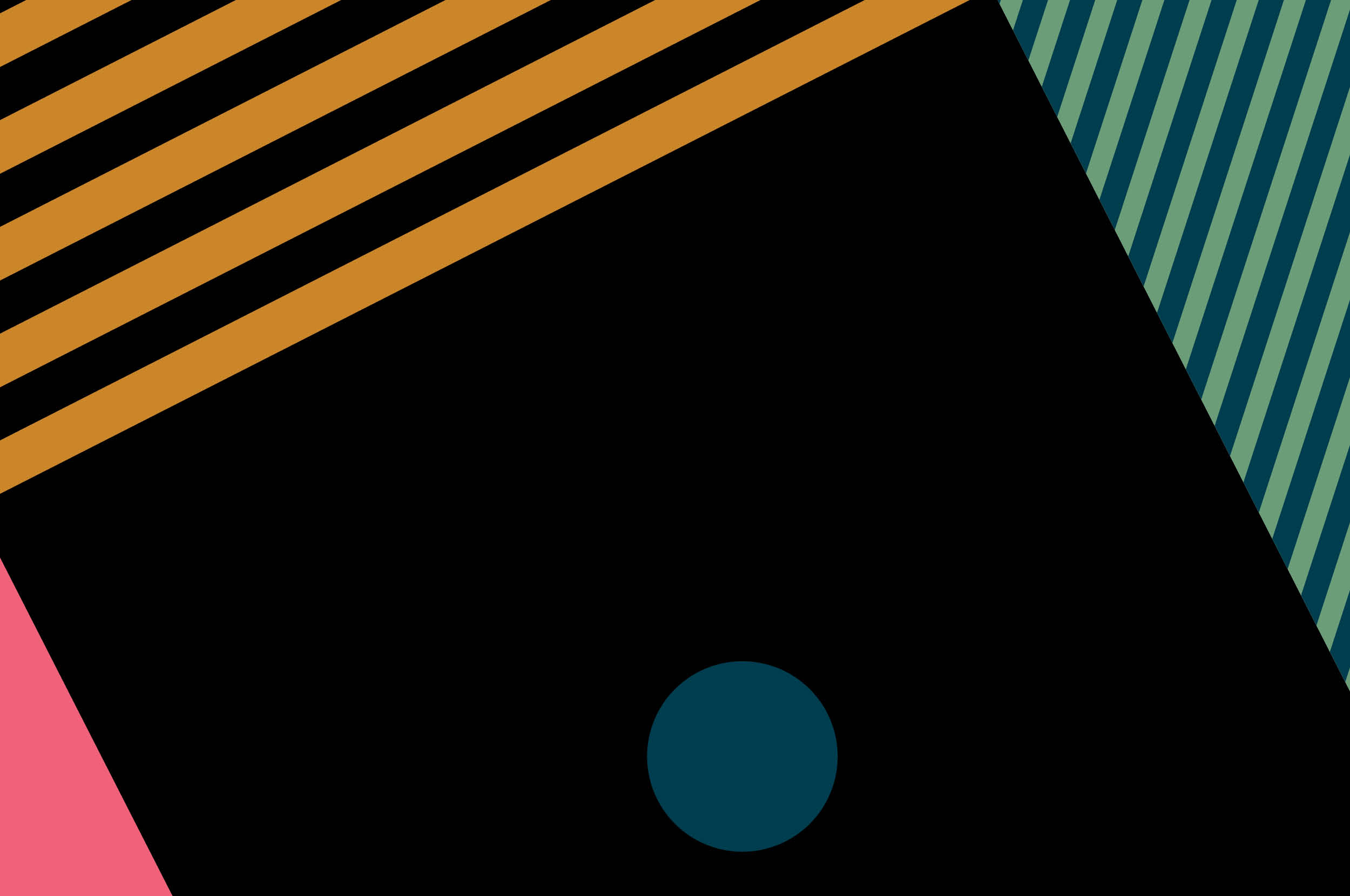

At a macro level, data travels from source systems to data products in the modern data stack through four stages: ingestion, storage, transformation and publication (see the figure above). With a rapidly growing ecosystem of off-the-shelf tools and services at each stage, businesses can now pick and choose those that meet their requirements and drastically reduce the need to build bespoke solutions. The benefits are fewer custom components to develop and maintain, lower cost, and faster time to production for data products.
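To make the four stages concrete, here is a minimal Python sketch of records moving through ingestion, storage, transformation and publication. It is an illustration under stated assumptions, not a reference implementation: the function names, the in-memory `warehouse` dictionary standing in for a cloud data warehouse or lake, and the sample order records are all hypothetical. In a real stack, each function would typically be replaced by an off-the-shelf tool or service.

```python
from collections import defaultdict


def ingest(source_rows):
    """Ingestion: copy raw records from a (hypothetical) source system as-is."""
    return [dict(row) for row in source_rows]


def store(warehouse, table, rows):
    """Storage: land raw records in a named table of the in-memory warehouse stand-in."""
    warehouse.setdefault(table, []).extend(rows)


def transform(warehouse, source_table, target_table):
    """Transformation: aggregate raw orders into revenue per customer."""
    totals = defaultdict(float)
    for row in warehouse[source_table]:
        totals[row["customer"]] += row["amount"]
    warehouse[target_table] = [
        {"customer": customer, "revenue": revenue}
        for customer, revenue in sorted(totals.items())
    ]


def publish(warehouse, table):
    """Publication: hand consumers a copy of the modelled table as a data product."""
    return list(warehouse[table])


if __name__ == "__main__":
    warehouse = {}  # hypothetical stand-in: table name -> list of row dicts
    raw_orders = [
        {"customer": "acme", "amount": 120.0},
        {"customer": "globex", "amount": 80.0},
        {"customer": "acme", "amount": 30.0},
    ]
    store(warehouse, "raw_orders", ingest(raw_orders))
    transform(warehouse, "raw_orders", "customer_revenue")
    print(publish(warehouse, "customer_revenue"))
    # → [{'customer': 'acme', 'revenue': 150.0}, {'customer': 'globex', 'revenue': 80.0}]
```

Keeping each stage behind its own function boundary mirrors the point above: any one stage can be swapped for a different tool without rewriting the others.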

Beyond the high-level stages, several critical DataOps practices and tools underpin successful implementations of the modern data stack, including:

- Modern engineering practices like automated build and deployment pipelines, infrastructure as code, CI/CD and trunk-based development.
- Data flow orchestration tools (e.g., Apache Airflow, Prefect and Dagster).
- Metadata management tools, including data catalogs, schema registries, and data lineage tools.
- Governance and security practices and tools.
- Data observability practices and tools.
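Orchestration tools such as Apache Airflow, Prefect and Dagster model a data flow as a directed acyclic graph (DAG) of tasks and run each task only after its upstream dependencies have completed. The sketch below illustrates that core idea with Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). It is not how any of those tools is implemented, the task names are hypothetical, and it deliberately omits what makes real orchestrators valuable: scheduling, retries, parallelism and monitoring.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run every task once, after all of its upstream tasks have run.

    tasks: mapping of task name -> zero-argument callable (hypothetical tasks).
    dependencies: mapping of task name -> set of upstream task names.
    Returns the task names in the order they were executed.
    """
    sorter = TopologicalSorter()
    for name in tasks:
        # Register every task, including those with no upstream dependencies.
        sorter.add(name, *dependencies.get(name, ()))
    executed = []
    # static_order() yields each task only after all of its predecessors and
    # raises graphlib.CycleError if the dependencies contain a cycle.
    for name in sorter.static_order():
        tasks[name]()
        executed.append(name)
    return executed


if __name__ == "__main__":
    log = []
    tasks = {
        "ingest_orders": lambda: log.append("ingested orders"),
        "ingest_customers": lambda: log.append("ingested customers"),
        "build_revenue_model": lambda: log.append("built revenue model"),
        "check_freshness": lambda: log.append("checked data freshness"),
        "publish_dashboard": lambda: log.append("published dashboard"),
    }
    dependencies = {
        "build_revenue_model": {"ingest_orders", "ingest_customers"},
        "check_freshness": {"build_revenue_model"},
        "publish_dashboard": {"check_freshness"},
    }
    print(run_pipeline(tasks, dependencies))
```

The two ingestion tasks do not depend on each other, so either may run first; a real orchestrator could run them in parallel. The hypothetical `check_freshness` task hints at where data observability checks often sit: between building a model and publishing it.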

An interesting open question is how the modern data stack and the data mesh paradigm relate. While they could be construed as opposing visions for a data platform (i.e., centralisation vs. decentralisation of data assets and ownership), the core data mesh principles are largely compatible with, and in many cases complementary to, the modern data stack. For example, while a centralised data platform may be a practical option early on the maturity curve, companies typically experience friction as demand for data products grows and the central team responsible for building and maintaining the data pipelines through the stack becomes a bottleneck. Companies can reduce this friction by evolving the stack so that disparate teams can autonomously develop end-to-end (E2E) data products on self-service infrastructure (see the mustard-coloured arrows in the figure above).
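The shift from a central pipeline team to domain teams building E2E data products on self-service infrastructure can be illustrated with a small, hypothetical Python model. `DataProduct`, `SelfServicePlatform` and their methods are illustrative names, not part of any real product. The platform offers a shared catalog and applies the same baseline checks to every team, while each domain team publishes and evolves only the products it owns. A real implementation would sit on top of the metadata, governance and security tooling listed earlier.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataProduct:
    """A data product owned end to end by a single domain team (hypothetical model)."""
    name: str
    owner_team: str
    schema: tuple[str, ...]  # column names exposed to consumers


@dataclass
class SelfServicePlatform:
    """Shared infrastructure that domain teams use without going through a central team."""
    catalog: dict[str, DataProduct] = field(default_factory=dict)

    def publish(self, product: DataProduct) -> None:
        # The platform applies the same baseline rules to every team,
        # but the owning team, not the platform, builds the product.
        if not product.schema:
            raise ValueError(f"{product.name}: a data product must declare its schema")
        existing = self.catalog.get(product.name)
        if existing is not None and existing.owner_team != product.owner_team:
            raise PermissionError(
                f"{product.name} is owned by {existing.owner_team}, not {product.owner_team}"
            )
        # New products are added; the owning team may replace its own products.
        self.catalog[product.name] = product

    def products_owned_by(self, team: str) -> list[str]:
        return sorted(p.name for p in self.catalog.values() if p.owner_team == team)


if __name__ == "__main__":
    platform = SelfServicePlatform()
    platform.publish(DataProduct("orders", "sales", ("order_id", "amount")))
    platform.publish(DataProduct("shipments", "logistics", ("shipment_id", "order_id")))
    print(platform.products_owned_by("sales"))  # → ['orders']
```

Ownership checks live in the shared platform so that no team has to wait on a central team to publish, yet no team can overwrite another team's product.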

If you’d like to discuss how the modern data stack or data mesh can help you bring data products to life faster, or want to share your experiences with either of these approaches, we’d love to hear from you!

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.