tl;dr - we present a new way to generate large quantities of high-quality synthetic data which outperforms GPT-4, with better controllability, at a fraction of the cost of prompting LLMs directly.

You can play with a demo here. Repo can be found here.

Introduction

Generating faithful synthetic data in natural language processing (NLP) is critical for diverse applications, yet it remains challenging to produce data that is faithful to the real distribution of the domain. The current state-of-the-art usage of Large Language Models (LLMs) for this purpose often falls short in capturing the authentic variability and complexity of real-world data without significant prompt engineering efforts (Veselovsky et al., 2023). While these models have lowered the barrier to entry for data generation, their effectiveness is frequently hampered by the difficulty of crafting universal prompts that yield diverse and representative outputs, as well as the prohibitive costs associated with the approach.

In response, we propose a novel approach that diverges from direct reliance on LLMs. Our method centers on geometric sampling within the latent space of embedding models, a technique we argue is more adept at mirroring the statistical distributions inherent to specific domains. This process involves selecting points through geometric manipulations and then decoding them (Morris et al., 2023) to generate synthetic data that better reflects the multifaceted nature of real-world datasets.

Our approach is grounded in the premise that direct sampling from an embedding's latent space, coupled with a sophisticated decoding process, can overcome the limitations of LLM-generated synthetic data. It is particularly advantageous in scenarios where reference data is scarce, biased, or when capturing the domain's intrinsic diversity is paramount. By focusing on the latent space, we aim to produce synthetic data that is not only diverse and contextually rich but also verifiably more aligned with the underlying distributions of authentic data.

Intuition

The geometry of the embedding space

Embedding models (like OpenAI’s ada-002) learn a representation of input text along many (often thousands of) dimensions. Each dimension is continuous, but often represents complex or otherwise “latent” features, so it’s really difficult for us as humans to intuit what each dimension means.

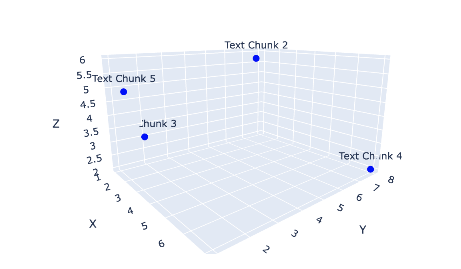

An “embedding” is a vector created by an embedding model that encodes some given text into a series of numbers. The output is a vector which you can loosely think of as a coordinate that lives in the space created by these dimensions. Let’s say we have several pieces of text that are conceptually similar to each other, therefore located near each other in this space:

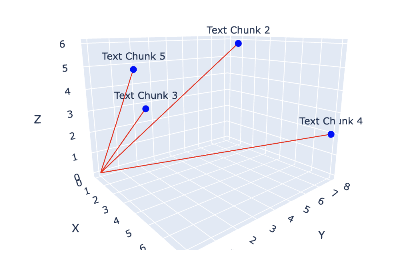

You could measure how far apart the points are in the space to determine their relative similarity, but distances tend to lose meaning as the number of dimensions in the space grows large. Another way to think about these points is that each has a direction relative to the origin. You can measure the difference in their directions to determine their degree of conceptual similarity (i.e., cosine similarity).
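To make the distance-versus-direction distinction concrete, here is a minimal sketch using NumPy. The three vectors are made-up toy values, not real ada-002 embeddings, and the helper names are ours.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between a and b (1.0 means identical direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def euclidean_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Straight-line distance between a and b."""
    return float(np.linalg.norm(a - b))

# Toy 3-dimensional "embeddings" (illustrative values only).
a = np.array([1.0, 2.0, 3.0])
b = 2.0 * a                      # same direction as a, twice the length
c = np.array([3.0, 2.0, 1.0])    # same length as a, different direction

print("a vs b:", cosine_similarity(a, b), euclidean_distance(a, b))
print("a vs c:", cosine_similarity(a, c), euclidean_distance(a, c))
```

In this toy case `c` is closer to `a` than `b` is by Euclidean distance, yet `b` points in exactly the same direction as `a` while `c` does not, so the two measures rank the neighbours differently.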

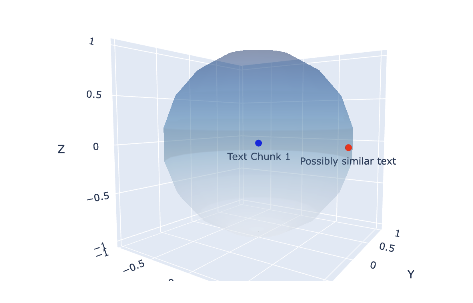

When we embed text using models like OpenAI’s ada-002, we are essentially providing it text and it returns a coordinate in a space that we know very little about - all we know is that the coordinate we were given represents the text, and every coordinate “near” it represents similar text in some way. We don’t know what each dimension means, nor do we know the shape of the space itself. Let’s say that we wanted to pick another point that we felt represents extremely similar text to a given embedding. We might be tempted to pick a point that is a very short distance away in any direction:

This would work just fine if every dimension varied by exactly the same amount in exactly the same way (the formal name for this is that the space is isotropic). But as it turns out, we don’t have a guarantee that an embedding space is isotropic. In fact, isotropy is not something that is generally observed in the embedding distributions learned by transformers (like OpenAI’s ada-002) (Tyshchuk et al., 2023). As a result, we may end up picking a point that actually isn’t all that similar to the original data, despite it being close by in Euclidean space. This is because the dimension we ended up traveling along to pick our new point has an outsized impact on the meaning of the text relative to our original text.

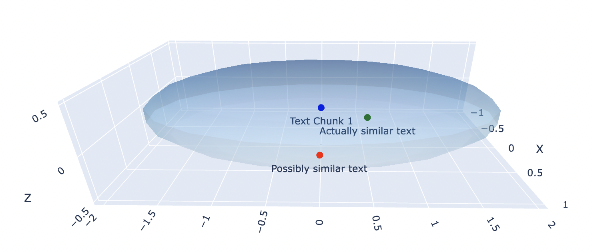

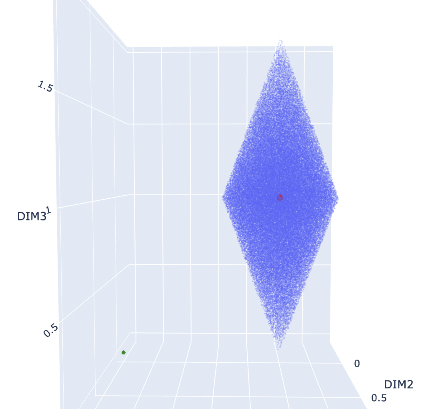
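A toy numerical illustration of the point above, under assumptions of our own: a 2-dimensional vector with invented values, and direction used as a stand-in for meaning. It says nothing about the actual geometry of ada-002's space. Two steps of identical Euclidean length change the direction of the vector by very different amounts depending on which dimension we travel along.

```python
import numpy as np

def angle_degrees(a: np.ndarray, b: np.ndarray) -> float:
    """Angle between a and b in degrees."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

x = np.array([10.0, 0.1])   # toy "embedding": almost all mass on dimension 0
step = 1.0                  # identical Euclidean step size in both cases

moved_along_0 = x + np.array([step, 0.0])
moved_along_1 = x + np.array([0.0, step])

angle_0 = angle_degrees(x, moved_along_0)
angle_1 = angle_degrees(x, moved_along_1)

print(f"step along dimension 0 changes direction by {angle_0:.3f} degrees")
print(f"step along dimension 1 changes direction by {angle_1:.3f} degrees")
```

Both new points sit exactly one unit away from `x`, but the second one has rotated roughly a hundred times further away from the original direction.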

Since we don’t actually know anything about the actual shape of the space itself, we have to account for the anisotropy at a local level. For instance: we can consider our starting text’s direction and use it to orient the way we pick our next point. The intuition is that two embeddings with similar directions have similar core meaning, so as long as we are sampling points in the direction of our initial text, we should end up with points that actually mean roughly the same thing. Previously, we ended up with a sphere just because we made an assumption that we would sample along every dimension equally - but since that’s not the case, we end up with a different looking shape.

We pick the centroid’s direction as the axis of a double-cone (two cones ‘squished’ together at the base) so that we primarily sample along that axis as opposed to sampling blindly in every direction. This way, we can ensure that the sampled points are likely conceptually similar to the original point we picked, and we can tune the angle of the double-cone to account for any embedding space we might find ourselves in. We found that this shape seems to perform well when projected into “real” embedding spaces and seems to align well with human expectations of the output.

Related work

Embedding models are a cornerstone of modern NLP, converting high-dimensional data into a lower-dimensional, dense representation. This latent space captures the semantic relationships between data points, offering a rich ground for exploration and manipulation. Recently, there have been attempts to decode these dense representations back to the original high-dimensional data (Wang et al., 2021; Morris et al., 2023) which we build off of as a way to decouple the sampling and decoding components of the system.

While the embedding is lower dimensional than the input data, it is still “highly dimensional” from the perspective of geometry. Functions we take as trivial in two and three dimensions like shape-bounded sampling and rotations become computationally expensive in n dimensions. We looked to the field of geometric learning (Bronstein et al., 2017; Monti et al., 2017) for inspiration in how best to manipulate latent spaces to approximate non-Euclidean domains. These works delve into techniques for learning and sampling from complex manifolds, informing our approach to selecting representative points within the latent space. By integrating these geometric insights, we aim to improve the efficiency and effectiveness of our sampling process, ensuring that the generated synthetic data is both diverse and faithful to the original distribution.

Our work builds upon a rich body of research in embedding models, geometric learning, high-dimensional sampling, and decoding techniques. By integrating these diverse insights, we aim to advance the state of synthetic data generation in NLP, providing a more robust and versatile tool for researchers and practitioners alike.

Methodology

Our approach revolves around a two-step process: geometric sampling in the latent space of an embedding model and then decoding these points back to text. This approach allows us to systematically explore and generate data that retains the semantic integrity of the original dataset while introducing desired variability.

Embedding Model:

We utilize OpenAI's ada-002 embedding model as our base. This model provides a dense representation of the input data in a latent space, capturing the essential semantic features required for effective manipulation. The choice of ada-002 is due to its robust performance across various NLP tasks and its ability to handle a wide range of data types and structures.

Geometric sampling in latent space:

The core of our methodology is geometric sampling within this latent space. We apply the following techniques to ensure that our sampling is both representative of the underlying data distribution and computationally efficient:

- Shape-bounded sampling: We define geometric boundaries within the latent space to guide our sampling process. This involves identifying regions of interest based on the density and distribution of the original data points, ensuring that our samples are focused on areas likely to yield coherent and meaningful synthetic data. We estimate a centroid and a direction from the provided reference data. We then sample a double-hypercone along the axis of the centroid’s direction, both to make our sampling robust to anisotropic spaces (i.e., those with inherent local direction) and to increase the likelihood of sampling high-quality embeddings.

- N-Dimensional Rotations: In order to sample the double-hypercone along the axis of direction, we first initialize the double-hypercone along its last dimension and then rotate it so that its axis is parallel to the calculated direction. This is complex to do in arbitrarily many dimensions (Aguilera and Pérez-Aguila, 2004; Zhelezov, 2017) while maintaining the relative distances and orientations of points and ensuring that the semantic relationships are preserved.

- N-Dimensional translation: We then translate the double-hypercone so that its center, i.e. the center of its circular base, is at the centroid of the embeddings, i.e. the mean of the embeddings.

- Sampling algorithms: Within the double-hypercone, we draw points according to a uniform, normal, or inverse-normal distribution. The choice of distribution balances the trade-off between exploration and exploitation, so that we efficiently cover the space while focusing on the most promising regions.
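The rotation and translation steps in the list above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: `rotation_between` is a name we introduce, it uses the two-reflection form R = I + 2(v uᵀ − w wᵀ) given in the formalization, and the points, direction and centroid are random placeholder values.

```python
import numpy as np

def rotation_between(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Return an n x n rotation matrix R such that R @ u == v.

    u and v must be unit vectors with u != -v. R is the product of two
    reflections, which simplifies to I + 2 (v u^T - w w^T), where w is the
    normalized bisector of u and v.
    """
    w = (u + v) / np.linalg.norm(u + v)
    return np.eye(len(u)) + 2.0 * (np.outer(v, u) - np.outer(w, w))

rng = np.random.default_rng(0)
n = 8                                   # toy dimensionality

# Placeholder target direction and centroid (not derived from real data).
direction = rng.normal(size=n)
direction /= np.linalg.norm(direction)
centroid = 3.0 * direction

# The shape is first built along the last coordinate axis.
last_axis = np.zeros(n)
last_axis[-1] = 1.0

R = rotation_between(last_axis, direction)

# Rotate row-vector points, then translate them to the centroid.
points = rng.normal(size=(5, n))
moved = points @ R.T + centroid

print(np.allclose(R @ last_axis, direction))   # axis now points along direction
print(np.allclose(R.T @ R, np.eye(n)))         # R is orthogonal
```

Because `R` is orthogonal with determinant +1, pairwise distances between points are unchanged by the rotation, and the translation shifts every point by the same vector.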

Formalization

First, given 𝑚 embeddings 𝐸ᵢ, each a vector in 𝑛 dimensions, the centroid is given as the mean of the embeddings:

\[\begin{aligned}\vec{c} = \frac{1}{m} \sum_{i=1}^{m} E_i\end{aligned}\]

The height of the optimal double-hypercone is given as ℎ, and calculated as follows:

\[\begin{aligned}&\textbf{Given:} \\ &V : \text{A matrix of embedding vectors in } \mathbb{R}^n \\ &\vec{c} : \text{Centroid vector in } \mathbb{R}^n \\ &p : \text{Desired percentile (0-100)} \\\\ &\textbf{Procedure:} \\ &\text{1. Calculate the norm of centroid:} \\\\ &\quad ||\vec{c}|| = \sqrt{\sum_{i=1}^{n} c_i^2} \\\\ &\text{2. Project each embedding vector onto the centroid:} \\\\ &\quad \vec{P} = \frac{V \cdot \vec{c}}{||\vec{c}||} \\\\ &\text{3. Center the projections around the norm of the centroid:} \\\\ &\quad \vec{C} = | \vec{P} - ||\vec{c}|| | \\\\ &\text{4. Determine the height of the cone:} \\\\ &\quad h = Q_p(\vec{C}) \text{ (the } p^{th} \text{ percentile of } \vec{C}) \\ \end{aligned}\]
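The centroid and height procedure above can be sketched in NumPy as follows. The function name `centroid_and_height` and the random stand-in data are our own assumptions; real usage would pass a matrix of actual embeddings with one row per embedding.

```python
import numpy as np

def centroid_and_height(V: np.ndarray, p: float):
    """Return (centroid, height) for a matrix V with one embedding per row."""
    c = V.mean(axis=0)                       # centroid: mean of the embeddings
    c_norm = np.linalg.norm(c)               # step 1: norm of the centroid
    projections = V @ c / c_norm             # step 2: scalar projection onto the centroid
    offsets = np.abs(projections - c_norm)   # step 3: distance from the centroid along the axis
    h = float(np.percentile(offsets, p))     # step 4: p-th percentile gives the height
    return c, h

# Stand-in data: 200 random 16-dimensional vectors clustered around (1, ..., 1).
rng = np.random.default_rng(0)
V = rng.normal(loc=1.0, scale=0.1, size=(200, 16))

c, h = centroid_and_height(V, p=95)
print("centroid shape:", c.shape, "height:", h)
```

A higher percentile `p` yields a taller cone that covers more of the reference points along the axis, at the cost of reaching further from the centroid.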

The optimal cone-angle is given as 𝛼, and calculated as follows:

\[\begin{aligned}&\textbf{Given:} \\ &V : \text{A matrix of embedding vectors in } \mathbb{R}^n \\ &\vec{c} : \text{Centroid vector in } \mathbb{R}^n \\ &p : \text{Desired percentile (0-100)} \\\\ &\textbf{Procedure:} \\ &\text{1. Calculate the vertex of the cone:} \\\\ &\quad \vec{v} = \vec{c} + h \cdot \frac{\vec{c}}{||\vec{c}||} \\\\ &\text{2. Compute vectors from each embedding point to the cone's vertex:} \\\\ &\quad \vec{E} = \vec{v} - V \\\\ &\text{3. Calculate the cosine of the angle between each vector and the cone's axis:} \\\\ &\quad \cos(\theta_i) = \frac{\vec{E}_i \cdot \vec{v}}{||\vec{E}_i|| \cdot ||\vec{v}||} \\\\ &\text{4. Determine the angles between the embedding vectors and the axis:} \\\\ &\quad \Theta = |\arccos(\cos(\theta_i))| \\\\ &\text{5. Select the } p^{th} \text{ percentile of the angles to define the cone's spread:} \\\\ &\quad \alpha = Q_p({ \Theta, \frac{\pi}{2} - \Theta }) \text{ (the } p^{th} \text{ percentile of combined angle set)} \\\\ \end{aligned}\]
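A sketch of the cone-angle procedure above, under two assumptions of ours: we read the "combined angle set" as the concatenation of Θ and π/2 − Θ, and we plug in an arbitrary height `h = 0.3` and random stand-in data rather than values computed from real embeddings. The name `cone_angle` is hypothetical.

```python
import numpy as np

def cone_angle(V: np.ndarray, c: np.ndarray, h: float, p: float) -> float:
    """Return the cone angle alpha (radians) for embeddings V (one per row)."""
    axis = c / np.linalg.norm(c)
    vertex = c + h * axis                                  # step 1: vertex of the cone
    E = vertex - V                                         # step 2: vectors to the vertex
    cos_theta = (E @ vertex) / (
        np.linalg.norm(E, axis=1) * np.linalg.norm(vertex)
    )                                                      # step 3: cosine against the axis
    theta = np.abs(np.arccos(np.clip(cos_theta, -1.0, 1.0)))  # step 4: angles
    combined = np.concatenate([theta, np.pi / 2 - theta])  # step 5: combined angle set
    return float(np.percentile(combined, p))

# Stand-in data: 200 random 16-dimensional vectors clustered around (1, ..., 1).
rng = np.random.default_rng(0)
V = rng.normal(loc=1.0, scale=0.1, size=(200, 16))
c = V.mean(axis=0)
h = 0.3                                                    # arbitrary illustrative height

alpha = cone_angle(V, c, h, p=90)
print("alpha (degrees):", np.degrees(alpha))
```

Under this reading, the combined set is symmetric about π/4, so choosing `p = 50` always gives α = π/4 regardless of the data; percentiles away from the median are what make α data-dependent.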

We sample a double-hypercone in 𝑛 dimensions oriented along the centroid direction as primary axis as follows:

\[\begin{aligned}&\textbf{Given:} \\ &V : \text{A matrix of embedding vectors in } \mathbb{R}^n \\ &\vec{c} : \text{Centroid vector in } \mathbb{R}^n \\ &N : \text{Number of points to sample} \\ &h : \text{Height of the hyper-cone} \\ &\alpha : \text{Angle of the hyper-cone from major axis} \\ &D : \text{Distribution type (uniform, normal, or inverse_normal)} \\\\ &\textbf{Procedure:} \\\\ &\text{1. Generate direction factors:} \\\\ &\quad \text{Direction} = 2 \cdot \text{Bernoulli}(0.5, N) - 1 \\\\ &\text{2. Generate heights based on the distribution:} \\\\ &\quad \text{Height} = h \cdot R_{\text{uniform}}(N)^{1/3} \cdot \text{Direction} \\\\ &\text{3. Calculate radii according to the distribution:} \\\\ &\quad \text{If } D = \text{'uniform'}: R = | \text{Height} | \cdot \tan(\alpha) \cdot R_{\text{uniform}}(N)^{1/2} \\ &\quad \text{If } D = \text{'normal'}: R = | \text{Height} | \cdot \tan(\alpha) \cdot | R_{\text{normal}}(N) | \\ &\quad \text{If } D = \text{'inverse_normal'}: R = | \text{Height} | \cdot \tan(\alpha) \cdot | R_{\text{inv_normal}}(N) | \\\\ &\text{4. Sample points on an (n-1)-dimensional hypersphere:} \\\\ &\quad S_i \sim \mathcal{N}(0, I_{n-1}), \quad \text{Normalize: } S_i = \frac{S_i}{||S_i||} \\\\ &\text{5. Scale points to within the radii:} \\\\ &\quad S_i = S_i \cdot R_i \\\\ &\text{6. Construct points with the final coordinate:} \\\\ &\quad P_i = (S_i, \text{Height}_i - \text{Direction}_i \cdot h) \\\\ &\text{7. Rotate and translate points if necessary:} \\\\ &\quad \text{7.1 Calculate the unit vector along the cone's axis:} \\\\ &\quad \vec{c}_{\text{norm}} = \frac{\vec{c}}{||\vec{c}||} \\\\ &\quad \text{7.2 Define a vector representing the original orientation of the cone:} \\\\ &\quad \vec{o} = (0, 0, \ldots, 0, 1)^T \\\\ &\quad \text{7.3 Check if the original axis and the cone's axis are nearly parallel:} \\\\ &\quad \text{If } | \vec{o} \cdot \vec{c}_{\text{norm}} | \approx 1 \text{, then no rotation is needed.} \\\\ &\quad \text{Define } \vec{u} = \vec{o} \text{ and } \vec{v} = \vec{c}_{\text{norm}}. \\ &\quad \text{7.4 Calculate the bisector of } \vec{u} \text{ and } \vec{v}: \\\\ &\quad \vec{w} = \frac{\vec{u} + \vec{v}}{||\vec{u} + \vec{v}||} \\\\ &\quad \text{7.5 Compute the rotation matrix using Rodrigues' rotation formula:} \\\\ &\quad R = I + 2 \cdot (\vec{v}\vec{u}^T - \vec{w}\vec{w}^T) \\\\ &\quad \text{7.6 Rotate the points to align with the cone's axis:} \\\\ &\quad P_{\text{rotated}} = P \cdot R^T \\\\ &\quad \text{7.7 Translate the points by the centroid:} \\\\ &\quad P_{\text{hypercone}} = P_{\text{rotated}} + \vec{c} \\\\ &\textbf{Output:} \\ &\text{ } P_{\text{hypercone}}, \text{ An array of } N \text{ points in } \mathbb{R}^n \text{ within the specified double-hypercone.} \\ \end{aligned}\]
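The sampling procedure above can be sketched in NumPy roughly as follows. This is an illustrative reading of steps 1 through 7, not the authors' code: the function name `sample_double_hypercone` is ours, only the 'uniform' distribution is implemented (the normal and inverse-normal variants are omitted because their exact definitions are not given here), and the centroid, height and angle in the example are arbitrary placeholder values.

```python
import numpy as np

def sample_double_hypercone(c, h, alpha, N, rng):
    """Sample N points in a double-hypercone centred at c with axis along c."""
    n = c.shape[0]
    direction = 2 * rng.integers(0, 2, size=N) - 1            # step 1: +1 or -1
    height = h * rng.random(N) ** (1 / 3) * direction         # step 2: signed heights
    radius = np.abs(height) * np.tan(alpha) * rng.random(N) ** 0.5  # step 3 ('uniform')

    S = rng.normal(size=(N, n - 1))                           # step 4: random directions
    S /= np.linalg.norm(S, axis=1, keepdims=True)             #   on the unit hypersphere
    S *= radius[:, None]                                      # step 5: scale to the radii

    last = height - direction * h                             # step 6: final coordinate
    P = np.column_stack([S, last])

    v = c / np.linalg.norm(c)                                 # step 7.1: unit axis
    u = np.zeros(n)                                           # step 7.2: original axis
    u[-1] = 1.0
    if not np.isclose(abs(u @ v), 1.0):                       # step 7.3: skip if parallel
        w = (u + v) / np.linalg.norm(u + v)                   # step 7.4: bisector
        R = np.eye(n) + 2.0 * (np.outer(v, u) - np.outer(w, w))  # step 7.5
        P = P @ R.T                                           # step 7.6: rotate
    return P + c                                              # step 7.7: translate

# Placeholder inputs (not derived from real embeddings).
rng = np.random.default_rng(0)
centroid = rng.normal(size=16) + 1.0
h, alpha, N = 0.5, np.pi / 6, 1000

P = sample_double_hypercone(centroid, h, alpha, N, rng)
print(P.shape)
```

With these formulas the widest cross-section sits at the centroid and the two apexes lie a distance `h` away on either side along the axis, which matches the description of two cones joined at the base.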

Decoding Process:

Once we have sampled points from the latent space, the next step is to decode these points back into readable text. We leverage a pre-trained decoder/inversion model based on recent advancements in understanding and manipulating text embeddings (Morris et al., 2023). The decoder can be fine-tuned to our specific dataset, ensuring that it accurately reflects the style, tone, and content of the target domain - but we wanted to focus more on the geometric manipulations as the means to achieving higher quality decoded data rather than fine-tuning for the purposes of this paper.

Quality assessment:

To assess the quality of our generated synthetic data, we implement the following measures:

- Similarity/consistency scoring: We utilize BERTScore (Zhang et al., 2019) to evaluate the quality of the generated text relative to the reference dataset. While BERTScore correlates well with human judgement, there is inherent bias in the measure due to reliance on BERT-like pre-trained models. For this paper, we used microsoft/deberta-xlarge-mnli for BERTScoring.

- Diversity scoring: Since we also want to make sure we’re sampling diverse points, we use Self-BLEU (Zhu et al., 2018) to evaluate the diversity of our generated text. Self-BLEU measures the similarity between different pieces of text generated by the same model; lower scores indicate higher diversity. It's crucial to balance diversity with fidelity, as too much diversity might lead to irrelevant or off-topic generation. We control for this using BERTScoring as above.
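To show what Self-BLEU measures, here is a deliberately simplified, standard-library-only sketch. It is not the Texygen implementation: it uses whitespace tokenization, n-grams only up to bigrams, and add-one smoothing, so its numbers will not match published Self-BLEU scores. The example sentences are invented for illustration.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, references, max_n=2):
    """Simplified BLEU: clipped n-gram precision, add-one smoothing, brevity penalty."""
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        log_precision += math.log((clipped + 1) / (total + 1)) / max_n
    # Brevity penalty against the reference closest in length to the candidate.
    closest = min((len(r) for r in references),
                  key=lambda length: (abs(length - len(candidate)), length))
    if len(candidate) >= closest:
        bp = 1.0
    else:
        bp = math.exp(1 - closest / max(len(candidate), 1))
    return bp * math.exp(log_precision)

def self_bleu(texts, max_n=2):
    """Average BLEU of each text against all the others (lower = more diverse)."""
    if len(texts) < 2:
        raise ValueError("Self-BLEU needs at least two texts")
    tokenized = [t.lower().split() for t in texts]
    scores = [bleu(tok, tokenized[:i] + tokenized[i + 1:], max_n)
              for i, tok in enumerate(tokenized)]
    return sum(scores) / len(scores)

repetitive = ["what are the symptoms of flu"] * 3
diverse = ["what are the symptoms of flu",
           "how is diabetes treated in adults",
           "can stress cause chest pain"]

print("repetitive:", self_bleu(repetitive))
print("diverse:   ", self_bleu(diverse))
```

The identical sentences score at the maximum, while the three unrelated questions score much lower, which is the sense in which a lower Self-BLEU indicates a more diverse set.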

Results

We tested using a medical question-answer dataset (you can find the dataset here) to evaluate our approach’s ability to project into domain-specific spaces, and compared our results to the output of prompting GPT-4.

Here are some caveats:

- We tested decoding using a small pre-trained model based on a T5 architecture, and did not fine-tune it on the dataset. This means that even if the points being sampled are high quality, the model’s ability to produce high-quality text from those embeddings may be the bottleneck.

- We generated only small numbers of samples, so that they would not exceed GPT-4’s context window. Our geometric sampling approach naturally outperforms GPT-4 once the generated samples (or reference examples) exceed the given context window, but we wanted to understand the potential quality trade-off that we would experience in using this approach with no optimization on the dataset or task.

- We utilized BERTScore to evaluate conceptual consistency, instead of using an approach that would simply re-use ada-002’s embedding space. We did this to keep the scoring mechanism independent of the geometric projection (otherwise we could ‘game’ the score by sampling points optimally from the same space used to score), but likely at the cost of overall score quality.

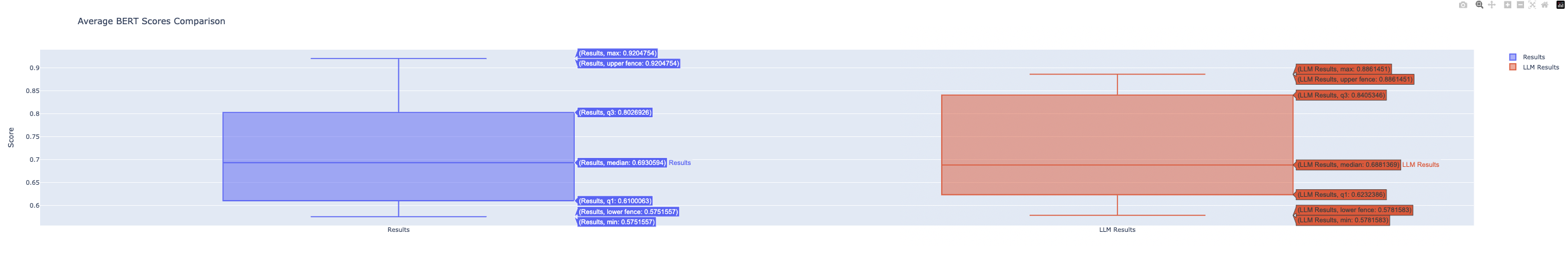

BERTScore

We found that decoding the geometrically sampled points performed comparably to GPT-4 even without fine-tuning, and with a much less capable model. The median BERTScore of the decoded text was higher than that of GPT-4; however, GPT-4 produced outputs towards the top end of its BERTScore range more consistently. We suspect that this outcome can be significantly improved through fine-tuning and by using a larger model for decoding the sampled embeddings.

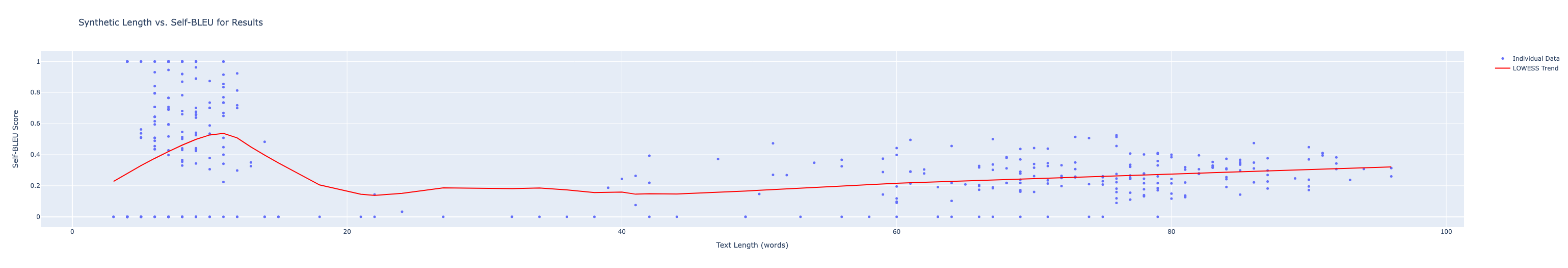

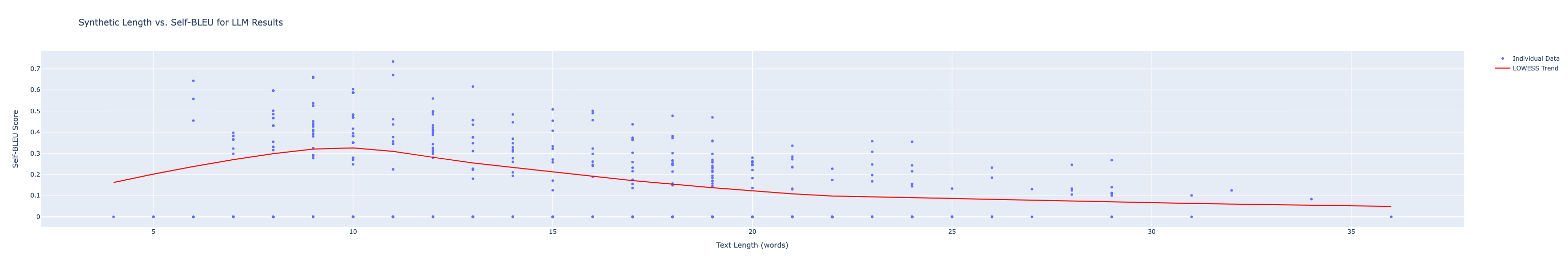

Self-BLEU

On the other hand, we found that the geometric projection approach performed as well as, or perhaps better than, GPT-4 in terms of diversity of output. Through geometric sampling and decoding with our pre-trained model, we were able to control the diversity of generated data at a very granular level. As we expected, this showed up as a significantly smaller spread of Self-BLEU scores compared to GPT-4’s output. We also noticed that the text decoded from the geometrically sampled points tended to be closer in length to the reference set than the text produced by GPT-4 (average length difference was 56 characters vs. 76 from GPT-4).

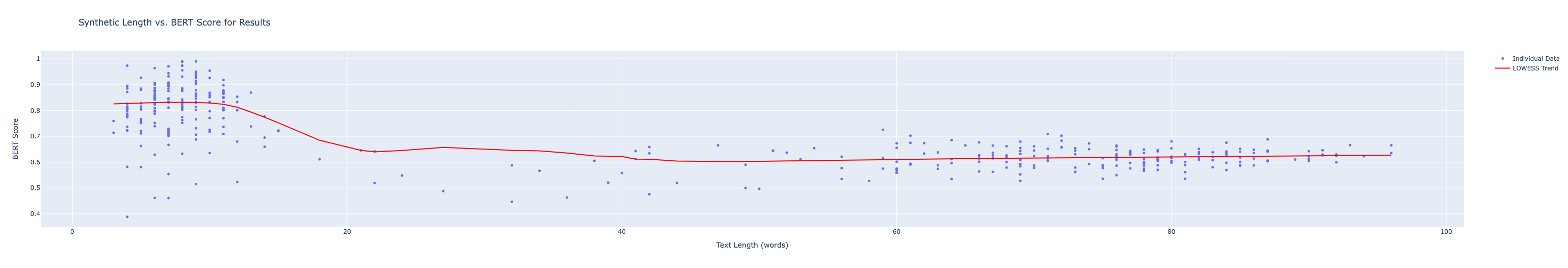

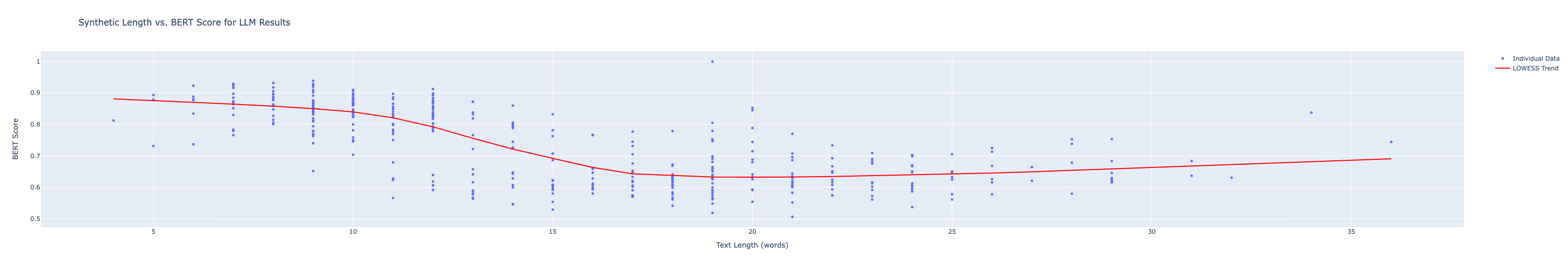

Scores vs. content length

We were also interested in understanding the relationship between the length of generated text and the scores we were using to evaluate quality of the output. There is a theoretical maximum amount of information that these embeddings can encode, and it depends on the purpose the embedding model was designed/trained for. For instance, ada-002 was not optimized for text compression, but rather for search/semantic similarity. In each embedding, there is a finite amount of information encoded because there are finite dimensions with finite precision, so we wanted to understand how quickly we would hit those limitations in practice.
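As a rough back-of-the-envelope bound: ada-002 embeddings have 1,536 dimensions, and if we assume each dimension is stored as a 32-bit float (an assumption about storage only, not a claim about how much of that precision carries meaning), the raw capacity of a single embedding is

\[\begin{aligned}1536 \text{ dimensions} \times 32 \text{ bits} = 49{,}152 \text{ bits} = 6{,}144 \text{ bytes}\end{aligned}\]

This is only a loose ceiling. The amount of text that can be recovered in practice is presumably far smaller, since the model was trained for semantic similarity rather than lossless compression.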

For Self-BLEU, the score results vs. length made some intuitive sense to us. Scores peaked (i.e., text was minimally diverse) when the generated text was short. Said another way: words were reused frequently when there was less surface area in the generated text. This was true for both GPT-4 and the geometric projection approach; however, GPT-4’s Self-BLEU consistently decreased as generated text length increased, while our pre-trained decoding model tended to reuse tokens as text length increased. We suspect that this is a model size/pre-training bottleneck rather than a limitation of the approach itself.

The BERTScore vs. length analysis was a little less obvious - both approaches showed a drop in BERTScore at around 15 tokens in generated length and stabilized soon after, with GPT-4 showing a more positive trend line in BERTScore as token lengths increased. That said, this warrants more analysis as it’s possible that BERTScoring has its own quirks relative to text length.

Applications

Using our method of geometric projection, we’re able to achieve outputs that are comparable to GPT-4 at a fraction of the cost, and without the limitations of a finite context window.

1. Data Generation at Scale

LLM-based data generation is limited by the available context window. It’s possible to parallelize prompting to get around this limitation, but when the LLM is not conditioned on the previous generations, collisions between generated examples are likely. On the other hand, our approach does not require conditioning a model on previous synthetic examples, and can be massively parallelized while guaranteeing a global statistical distribution of the data.

2. Domain-specific faithful generation

In many real-world tasks (e.g., content moderation, fraud detection, AI testing) it is important to be able to assert that synthetically generated data is faithful to ‘real’ data distributions. This becomes harder when available data is scarce or otherwise domain-specific (which would classically require specialized models to synthesize). Using a geometric projection approach on a well-trained embedding space, it’s possible to find the domain and tune a distribution by hand, marrying human intuition about a domain and the available statistical information to better align outcomes.

Future work

Learning high-dimensional organic shapes

A significant avenue for future research is to move beyond simple geometric boundaries like hyperspheres or hypercones and towards learning the 'right shape' directly from the reference data. This involves developing algorithms that can understand and model the complex, organic shapes inherent in high-dimensional latent spaces. By accurately capturing the true geometry of the data distribution, we can generate synthetic data that is even more faithful and representative of real-world complexities.

Adaptive sampling strategies

Coupled with learning organic shapes, future work will also focus on developing adaptive sampling strategies that can intelligently navigate these learned spaces. This means not just normally, uniformly, or inverse-normally sampling points within a given boundary but understanding the dense and sparse regions of the space, the curvatures, and the ridges that define the underlying structure of the data. By learning where and how to sample, we can ensure that the generated synthetic data covers the full spectrum of variability and specificity present in the original dataset.

Integrating domain-specific knowledge

To further enhance the capability to learn and sample from these high-dimensional organic shapes, we plan to integrate domain-specific knowledge into the learning process. This could involve using insights from the target field to guide the shape learning and sampling process, ensuring that the generated data is not just statistically but also contextually aligned with the needs and nuances of the specific domain.

Conclusion

In this work, we have introduced a methodology for synthetic data generation that leverages geometric manipulations in the latent space of embedding models. A significant contribution of our approach is its model and domain agnosticism; the techniques we've developed can be applied to any existing embedding model and adapted to any domain covered by that model. This universality significantly broadens the applicability and potential impact of our work, offering a flexible and powerful tool for synthetic data generation across diverse fields.

Our approach addresses a critical gap in generating faithful and diverse synthetic data, particularly in specialized domains where reference data is scarce. By employing advanced geometric sampling and sophisticated decoding, we have demonstrated how manipulating latent spaces can more accurately capture the complex distributions of real-world data. This method provides a controlled and nuanced alternative to traditional LLM-based synthetic data generation, enhancing the quality and applicability of the resulting datasets.

This was originally published on the Watchful website and has been republished here as part of Thoughtworks' acquisition of the Watchful IP.

References

- Veniamin Veselovsky, Manoel Horta Ribeiro, Akhil Arora, Martin Josifoski, Ashton Anderson, and Robert West, 2023. Generating Faithful Synthetic Data with Large Language Models: A Case Study in Computational Social Science

- John X. Morris, Volodymyr Kuleshov, Vitaly Shmatikov, and Alexander M. Rush, 2023. Text Embeddings Reveal (Almost) As Much As Text

- Kirill Tyshchuk, Polina Karpikova, Andrew Spiridonov, Anastasiia Prutianova, Anton Razzhigaev, Alexander Panchenko, 2023. On Isotropy of Multimodal Embeddings

- Kexin Wang, Nils Reimers, and Iryna Gurevych, 2021. TSDAE: Using Transformer-based Sequential Denoising Auto-Encoder for Unsupervised Sentence Embedding Learning

- Michael M. Bronstein, Joan Bruna, Yann LeCun, Arthur Szlam, and Pierre Vandergheynst, 2017. Geometric deep learning: going beyond Euclidean data

- Federico Monti, Davide Boscaini, Jonathan Masci, Emanuele Rodolà, Jan Svoboda, Michael M. Bronstein, 2017. Geometric deep learning on graphs and manifolds using mixture model CNNs

- Antonio Aguilera and Ricardo Pérez-Aguila, 2004. General n-Dimensional Rotations

- Ognyan Zhelezov, 2017. N-dimensional Rotation Matrix Generation Algorithm

- Tianyi Zhang, Varsha Kishore, Felix Wu, Kilian Q. Weinberger, and Yoav Artzi, 2019. BERTScore: Evaluating Text Generation with BERT

- Yaoming Zhu, Sidi Lu, Lei Zheng, Jiaxian Guo, Weinan Zhang, Jun Wang, Yong Yu, 2018. Texygen: A Benchmarking Platform for Text Generation Models

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.