Kubernetes: an exciting future for developers and infrastructure engineering

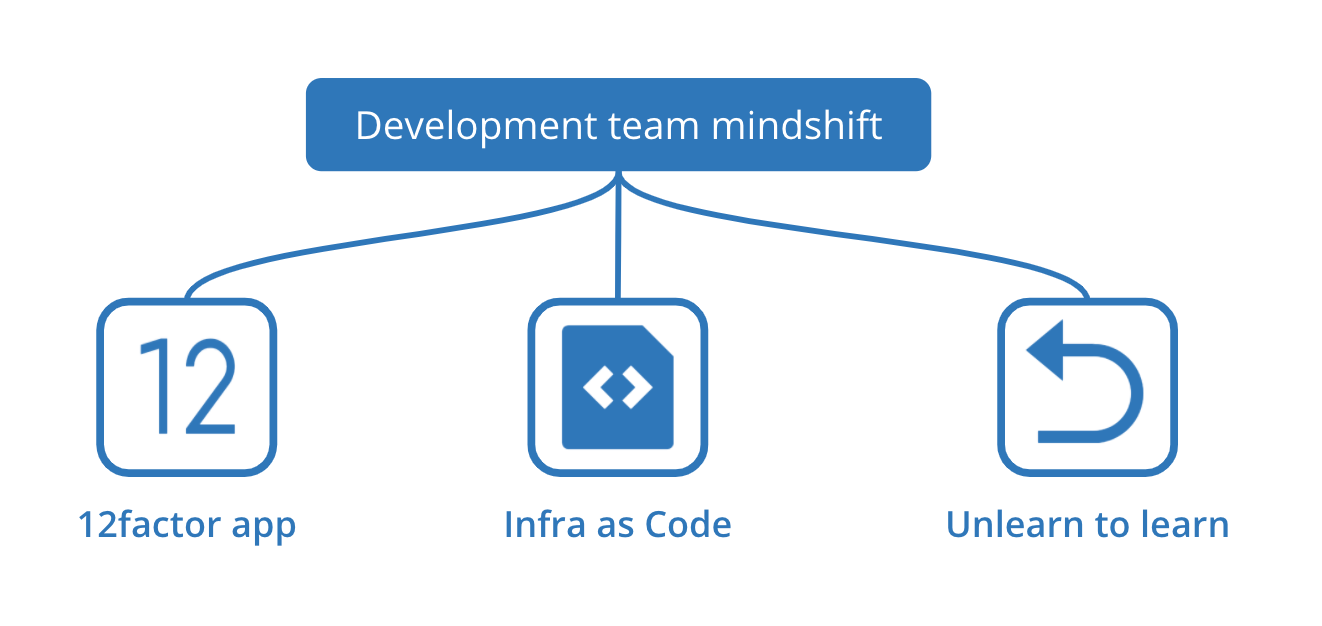

Development teams often face challenges when running applications on Kubernetes. Our advice is to follow the Twelve-Factor App principles, which are commonly applied when building cloud-native applications.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.