TL;DR

We’ve been experimenting with Claude Code — a coding assistant recently released by Anthropic — on CodeConcise, a tool we built at Thoughtworks to comprehend legacy codebases.

Adding support for new programming languages is an important, if time-consuming, part of developing CodeConcise; we wanted to see if we could use Claude Code to help. Typically, this would take a pair of developers two to four weeks, but Claude Code and an SME took just half a day. However, while the initial results were impressive, it wasn’t successful every time, as you’ll see…

What is Claude Code?

Claude Code is an AI coding assistant developed by Anthropic and released on February 24, 2025. It’s an example of what’s being described as a “supervised coding agent.” These are tools that can perform relatively sophisticated tasks in software development workflows, sometimes autonomously.

Most of the best-known supervised coding agents currently available are integrated into workflows via an IDE: they include Cursor, Cline and Windsurf. (GitHub Copilot currently has agentic workflows in preview, but it’s likely that this functionality will be widely available soon.) Claude Code differs from these in that it has a terminal-based interface (the open source agentic tools Aider and Goose also work in the terminal rather than in an IDE). Working through the terminal makes it easier to integrate agents into a wider ecosystem than if you’re limited to an IDE.

Comparing Claude Code to other supervised coding agents

Given the rapid pace of change in this space, any comparison of tools comes with a caveat: they are all evolving quickly. A snapshot comparison now is likely to be outdated in months, perhaps even weeks. However, there are a few things worth noting:

Claude Code is like Cline and Aider in that it requires an API key to connect to an LLM. You’re most likely going to access one of the Claude Sonnet series models, as these are currently the most effective at coding. By contrast, Cursor, Windsurf and GitHub Copilot are subscription products.

Claude Code, like Cline and Goose, can integrate with Model Context Protocol (MCP), an open standard for connecting LLMs with sources of data.

Claude Code’s performance and usefulness at this stage is really a question of how good it is at orchestrating code context, prompting the model, and integrating with other context providers.

| Tool | Interface | LLM connection |
| --- | --- | --- |
| Claude Code | Terminal | API key / pay what you use |
| Aider | Terminal | API key / pay what you use |
| Goose | Terminal | API key / pay what you use |
| Cursor | IDE | Monthly subscription (with some limits), or pay what you use with API key |
| Cline | IDE | API key / pay what you use |
| Windsurf | IDE | Monthly subscription (with some limits) |

Why were we interested in Claude Code?

We’re interested in AI-assisted coding and agentic AI; naturally, Claude Code’s release caught our attention.

While the range of potential use cases is huge, we specifically wanted to see if Claude Code could help us with a particular challenge we have encountered in the development of CodeConcise, our generative AI code discovery and intelligence tool: adding support for new languages.

So far, support has only been added as and when it’s needed. However, we had a hypothesis that expanding support to the majority of programming languages upfront could greatly improve the effectiveness of CodeConcise. This isn’t just a question of technical effectiveness: if we spend less time building support for a language in CodeConcise, we can also spend more time doing other value-adding work.

However, building support isn’t straightforward, and we thought Claude Code might be able to help. Given that our experiments coincided with the release of Claude Code, we promptly tried it out...

Some technical background on CodeConcise

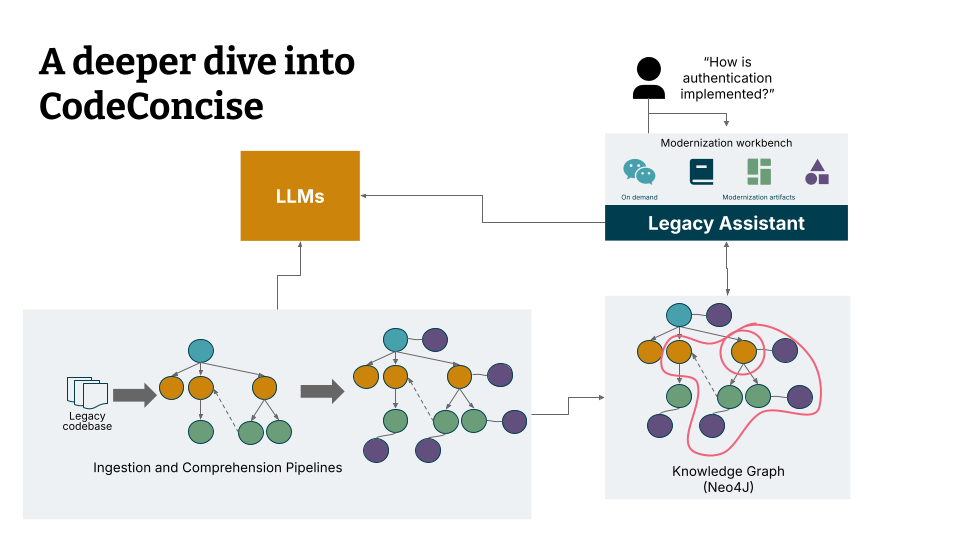

To understand how we were experimenting with Claude Code, it’s worth getting a bit of background on how CodeConcise works. CodeConcise makes use of abstract syntax trees (ASTs) not only to break apart codebases and extract their structure, but also to navigate those codebases when an LLM is used to summarize and explain them.

More specifically, CodeConcise requires language-specific, concrete implementations of an abstract class known in the code as an IngestionTool. Each implementation is designed to return a list of nodes and edges extracted from the code, which can then populate the knowledge graph. So far, our own implementations make use of ASTs to achieve this. Subsequently, we have language-agnostic traversals of this graph structure that enrich the graph with the information that’s extracted (using an LLM) from the code. This knowledge graph is then made available to the app that uses an agent and GraphRAG to provide insights from the code to users.
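To make that contract a little more concrete, here is a minimal sketch of what such an abstract class could look like. Only the name IngestionTool comes from CodeConcise; the `Node` and `Edge` types, the `ingest` method and the toy `LineCountingTool` are hypothetical names invented for illustration, and the real interface may well differ.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    # Hypothetical node shape: an identifier plus a kind such as "file".
    id: str
    kind: str


@dataclass(frozen=True)
class Edge:
    # Hypothetical edge shape: source id, target id and a kind such as "contains".
    source: str
    target: str
    kind: str


class IngestionTool(ABC):
    """Language-specific loader that turns source code into graph parts."""

    @abstractmethod
    def ingest(self, path: str, source: str) -> tuple[list[Node], list[Edge]]:
        """Return the nodes and edges extracted from one source file."""


class LineCountingTool(IngestionTool):
    """Toy implementation: one file node plus one node per non-blank line."""

    def ingest(self, path, source):
        nodes = [Node(path, "file")]
        edges = []
        for number, line in enumerate(source.splitlines(), start=1):
            if line.strip():
                line_id = f"{path}:{number}"
                nodes.append(Node(line_id, "line"))
                edges.append(Edge(path, line_id, "contains"))
        return nodes, edges


nodes, edges = LineCountingTool().ingest("example.txt", "first\n\nsecond\n")
```

The point of the split is that everything downstream of `ingest` only sees nodes and edges, so it can stay language-agnostic while each new language only needs its own concrete subclass.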

Although LLMs can be a very powerful tool for analyzing a codebase, one of the downsides is that whenever we want to support a new programming language — and extract relevant parts from its AST — we need to write new code in order to produce and interpret this tree. This takes time; typically two to four weeks. We have to find relevant codebase examples, build a suite of automated tests, and then make the changes to the codebase to support the new language. This is the main reason we’ve only done it when we’ve needed to.

What’s worked well with Claude Code so far?

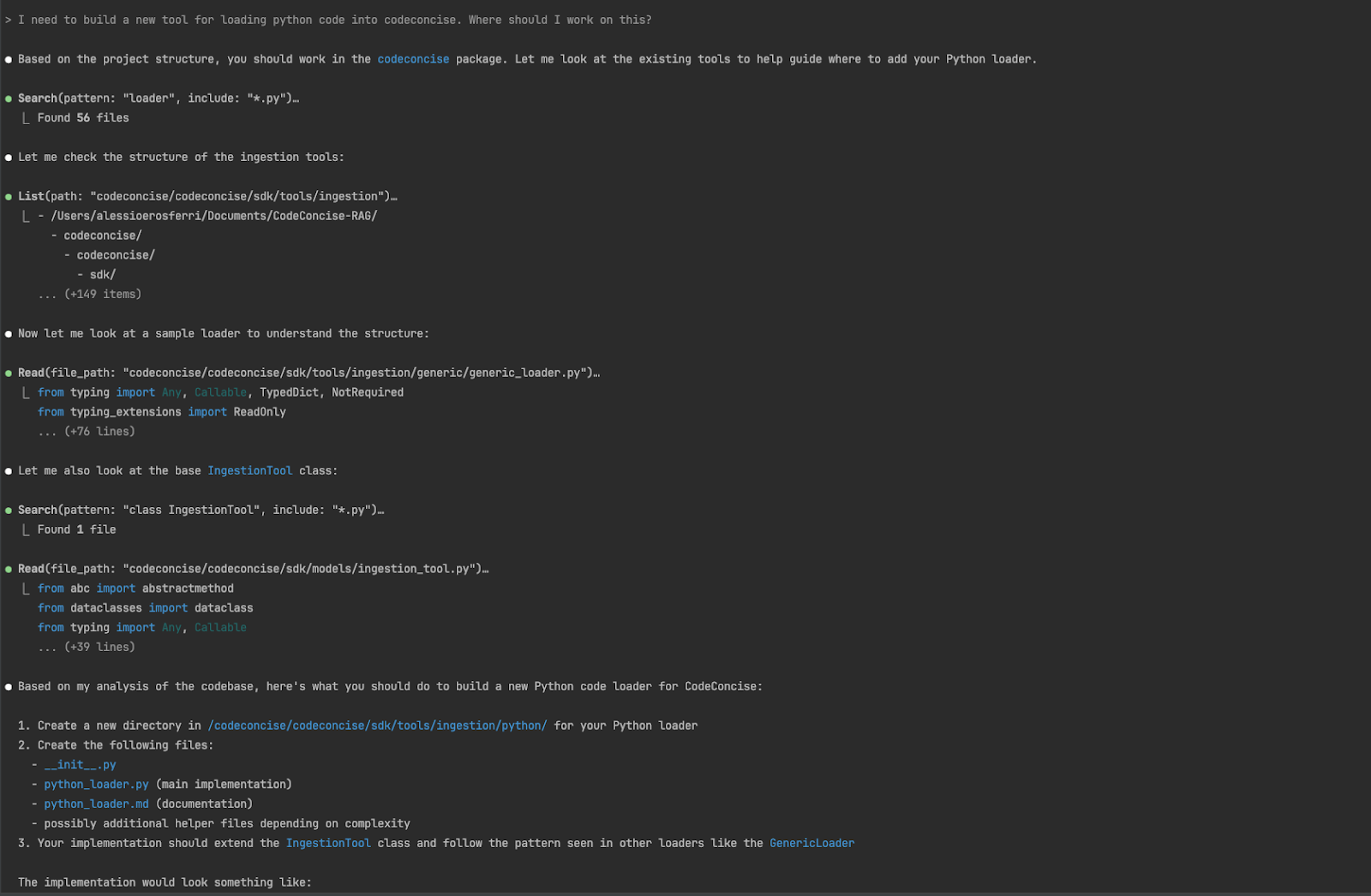

Success: Asking Claude Code to identify the necessary changes

First up, we asked Claude Code for guidance on the changes we’d need to implement to add support for Python in CodeConcise. This question might be pretty straightforward for a developer who has worked on the codebase for a few weeks, but it’s really valuable if you’re completely new to it.

Having access to the code and the documentation that lives next to it, Claude Code produced astonishing results. It accurately identified all the changes needed to support Python. Additionally, the code it suggested at the end shows that the agent didn’t just inspect the ingestion tools we had developed in the past; it also applied the patterns we used to implement them to the new code we were asking about.

Limited success: Asking Claude Code to implement the necessary changes to CodeConcise

Our second prompt to Claude Code was to go ahead and actually implement the suggested changes by itself:

I need to build a new tool for loading python code into CodeConcise. Please do this and test it.

It took a little more than three minutes of autonomous work. All the changes were implemented locally, including tests. All the tests suggested by Claude Code passed, but when we used CodeConcise to load its own source code into a knowledge graph and ran our own end-to-end tests, we identified a couple of problems:

The filesystem structure was not part of the graph itself

The edges connecting nodes did not conform to the model we have in CodeConcise. For example, call dependencies were missing (subsequent parts such as the comprehension pipeline would not be able to traverse the graph as we would expect)
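To illustrate what a call-dependency edge is, here is a minimal sketch that uses Python’s standard `ast` module to collect (caller, callee) pairs. It is not CodeConcise’s implementation: the function name `extract_call_edges` and the sample source are made up for this example, and it deliberately handles only direct calls by name inside top-level functions.

```python
import ast


def extract_call_edges(source: str) -> set[tuple[str, str]]:
    """Return (caller, callee) pairs for calls made inside top-level functions."""
    tree = ast.parse(source)
    edges = set()
    for func in tree.body:
        if not isinstance(func, ast.FunctionDef):
            continue
        for node in ast.walk(func):
            # Only direct calls by name (e.g. helper()), not method calls.
            if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
                edges.add((func.name, node.func.id))
    return edges


SAMPLE = '''
def load(path):
    return parse(read(path))

def read(path):
    return open(path).read()

def parse(text):
    return text.split()
'''

call_edges = extract_call_edges(SAMPLE)
# call_edges == {("load", "parse"), ("load", "read"), ("read", "open")}
```

Without edges like these, a pipeline that walks the graph from a function to the functions it depends on has nothing to follow.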

The importance of feedback loops in AI-assisted coding

This experiment is a good reminder of just how important it is to have multiple feedback loops when AIs are used to help us write code. If we didn’t have tests to validate that the integration actually worked, we wouldn’t have discovered this until much later. This could have been both disruptive and costly; both the developer and the agent would have lost context on the work in progress.
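As a rough sketch of the kind of check that can act as such a feedback loop, the function below inspects a graph for the two problems described above. The graph representation (a dict of node kinds and a list of edge tuples), the kind names and the function name `find_graph_problems` are all assumptions made for this example, not CodeConcise’s actual model or test suite.

```python
def find_graph_problems(nodes, edges):
    """Return human-readable problems found in a (nodes, edges) graph.

    nodes: dict mapping node id -> kind (e.g. "file", "function")
    edges: list of (source_id, target_id, kind) tuples
    """
    problems = []
    if "file" not in nodes.values():
        problems.append("no file system nodes in graph")
    if not any(kind == "calls" for _, _, kind in edges):
        problems.append("no call dependency edges in graph")
    for source, target, _ in edges:
        for endpoint in (source, target):
            if endpoint not in nodes:
                problems.append(f"edge references unknown node: {endpoint}")
    return problems


# A graph with the two gaps described above: functions only, no edges.
incomplete = find_graph_problems({"load": "function", "read": "function"}, [])

# A graph that includes the file system structure and a call edge.
complete = find_graph_problems(
    {"app.py": "file", "load": "function", "read": "function"},
    [
        ("app.py", "load", "contains"),
        ("app.py", "read", "contains"),
        ("load", "read", "calls"),
    ],
)
```

A check like this is cheap to run after every agent-generated change, which keeps the feedback arriving while both the developer and the agent still have the context.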

After providing this feedback to Claude Code, we waited a few seconds to see the code being updated. A first glance at the code produced shows how well this agent was able to follow patterns in the code, such as the use of Observers to create the file system structure as the code is being parsed.
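For readers unfamiliar with the pattern, here is a minimal sketch of how an observer could build up the file system structure while files are being parsed. The class and method names (`FileSystemObserver`, `on_file_parsed`, `Parser`) are hypothetical and chosen for illustration; the actual observers in CodeConcise are not shown in this article.

```python
from pathlib import PurePosixPath


class FileSystemObserver:
    """Records directory and file nodes as the parser reports each file."""

    def __init__(self):
        self.nodes = {}      # path -> "directory" or "file"
        self.edges = set()   # (parent_path, child_path)

    def on_file_parsed(self, path: str) -> None:
        file_path = PurePosixPath(path)
        self.nodes[str(file_path)] = "file"
        child = file_path
        # Walk up the parents, linking each directory to its child.
        for parent in file_path.parents:
            if str(parent) == ".":
                break
            self.nodes.setdefault(str(parent), "directory")
            self.edges.add((str(parent), str(child)))
            child = parent


class Parser:
    """Stand-in parser that only notifies observers; no real parsing."""

    def __init__(self, observers):
        self.observers = observers

    def parse(self, path: str) -> None:
        for observer in self.observers:
            observer.on_file_parsed(path)


observer = FileSystemObserver()
parser = Parser([observer])
for path in ["src/app/main.py", "src/app/util.py"]:
    parser.parse(path)
```

Because the parser only knows that it has observers to notify, the file system structure can be added to the graph without the language-specific parsing code having to change.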

It’s worth noting that for this specific use case much of the complex thinking had already been done by the developers who architected the tool. They had already made the decision to separate the core domain logic from the somewhat more repetitive implementation details required to support the parsing of a new language. All Claude Code had to do was put this information together and work out, from the existing design, which language-specific parts needed to be built.

What did this teach us?

However well-architected the existing solution may be, it takes (on average) a pair of developers and an SME two to four weeks to build support for a new language. With an SME and Claude Code working together, it took just a few minutes to produce the code for this specific use case, and a handful of hours to validate it.

What didn’t work?

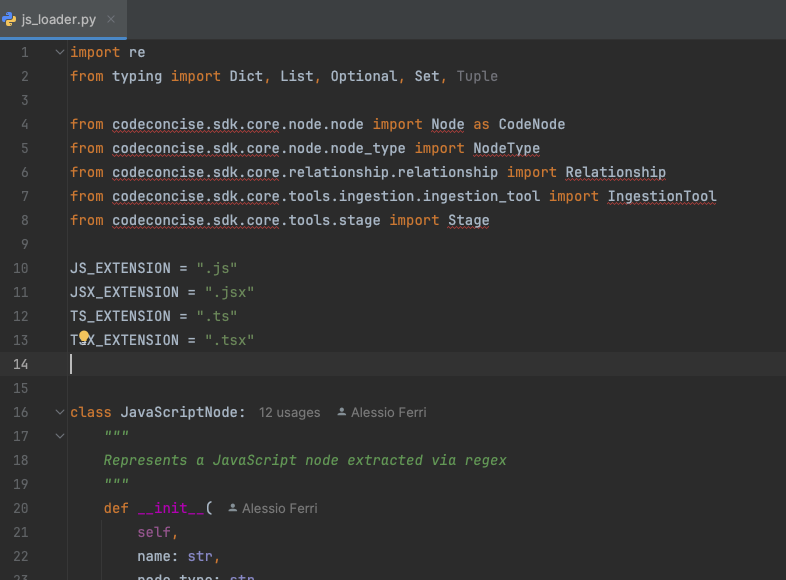

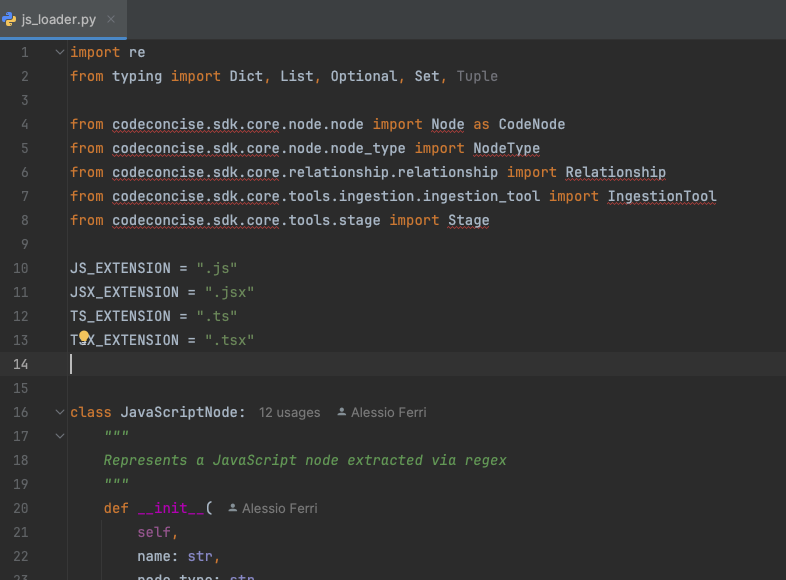

Complete failure: Asking Claude Code to help us add JavaScript support

We were pretty pleased with the results of our experiment, so we tried the same approach for JavaScript:

I want you to add an ingestion tool for javascript. I already have put the lexerbase and parserbase in there for you to use. Please use the stageobserver like in other places and use the visitor pattern for the lexer and parser like it is done in the tsql loader.

The first time, it tried to implement this using the ANTLR grammar for JavaScript. We couldn’t get the grammar to work (which is beyond the scope of both Claude Code and CodeConcise), so we couldn’t verify the code actually worked.

The second time, we asked Claude Code to use Tree-sitter instead. Our luck was running low: it started using libraries that did not exist. Again, we could not verify the generated code.

The third and final time for this series, we asked it to try another approach. It decided to use regex pattern matching to parse the code.

So, did it work? No: the code referenced internal packages that don’t even exist.

Evidently, the agent needs a stronger verification mechanism for the code it produces. It’s as if it misses the feedback that a simple unit test could provide. This isn’t something new; in fact, we’ve observed this plenty of times with other coding assistants.

We wondered if we had gotten lucky with the Python work it did the first time round, so we asked it to do the same for C. The results were pretty much the same as for Python, although the approach it followed was to use regular expression matching instead of a more reliable, AST-driven approach.
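To show why pattern matching is the less reliable option, here is a small sketch that compares a regular expression with an AST when looking for function definitions. It uses Python source as a stand-in, since Python ships an AST module in its standard library; the generated C ingestion tool is not shown in this article, and the regex below is our own illustration rather than the one Claude Code wrote.

```python
import ast
import re

SOURCE = '''
def real_function():
    return "def fake_function(): pass"

# def commented_out(): pass
'''

# Regex approach: match anything that looks like a definition.
regex_names = re.findall(r"def\s+(\w+)\s*\(", SOURCE)

# AST approach: only actual function definitions are reported.
ast_names = [
    node.name
    for node in ast.walk(ast.parse(SOURCE))
    if isinstance(node, ast.FunctionDef)
]

print(regex_names)  # ['real_function', 'fake_function', 'commented_out']
print(ast_names)    # ['real_function']
```

The regex reports text inside a string literal and a comment as if they were functions, whereas the parser understands the structure of the code and ignores both.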

What did this teach us about Claude Code and coding agents?

The experiment outlined above addressed a very specific use case, so it’s important not to draw conclusions that aren’t warranted. However, there are still some important takeaways that are worth sharing:

Outputs can be very inconsistent. Claude Code produced mind-blowing outcomes when asked to implement the Python and C ingestion tools, but gave very poor results when asked to do the same for JavaScript.

Coding assistants’ outcomes depend on multiple factors:

Code quality. What we have always considered good-quality code for humans also seems to matter for agents. When an agent works with well-written, modular, clean code that has been designed with separation of concerns and some documentation, we maximise the chance that it will produce good-quality outputs.

Library ecosystem. Was building the Python parser into CodeConcise easier because Python (the language CodeConcise is built on) already provides a standard module for converting Python code into ASTs? Our hypothesis is that while agents require a good set of tools to act upon the decisions they take, the quality of their output also depends on whether they can solve the problem using well-designed, widely established libraries.

Training data. LLMs and, by extension, AI agents are known to produce better code in programming languages that are well represented in their training data.

Large language models. As agents are built on top of large language models, their performance is directly affected by the underlying performance of the models they use.

The agent itself. This includes prompt engineering, workflow design and the tools at its disposal.

Human pairs. It’s often the case that when we see people and agents working together we get the best of both worlds. Also, developers experienced with agents often know tricks for guiding them through the work, which ultimately produces better outcomes.

Claude Code shows a lot of promise, and it’s exciting to see another supervised coding agent, particularly one that can be used in the terminal. Claude Code will certainly evolve, like the rest of the landscape, and we’re looking forward to exploring how we can continue to integrate these agents into the work we do.

Special thanks to Birgitta Böckeler for her support and guidance with our experiments and this piece.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.