Have you ever tried solving a difficult problem with no clear path forward? Perhaps the problem is not well understood, or there are many ideas about what might work but no approach to guide you. We’ve lived this very scenario, and we’ll take you through an approach we’ve found to be very effective.

Donald Rumsfeld’s well-known remark offers a way to classify the problems we solve every day:

“There are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns — the ones we don’t know we don’t know.”

Problems can be classified into four categories.

When working on problems with little data and high levels of risk (i.e. the “known unknowns” and “unknown unknowns”), it’s important to focus on finding the shortest path to the ‘correct’ solutions and eliminating the ‘incorrect’ ones as soon as possible. In our experience, the best approach to these problems is to use hypotheses to focus your thinking and data to inform your decisions. This is known as data-driven hypothesis development.

What is data-driven hypothesis development?

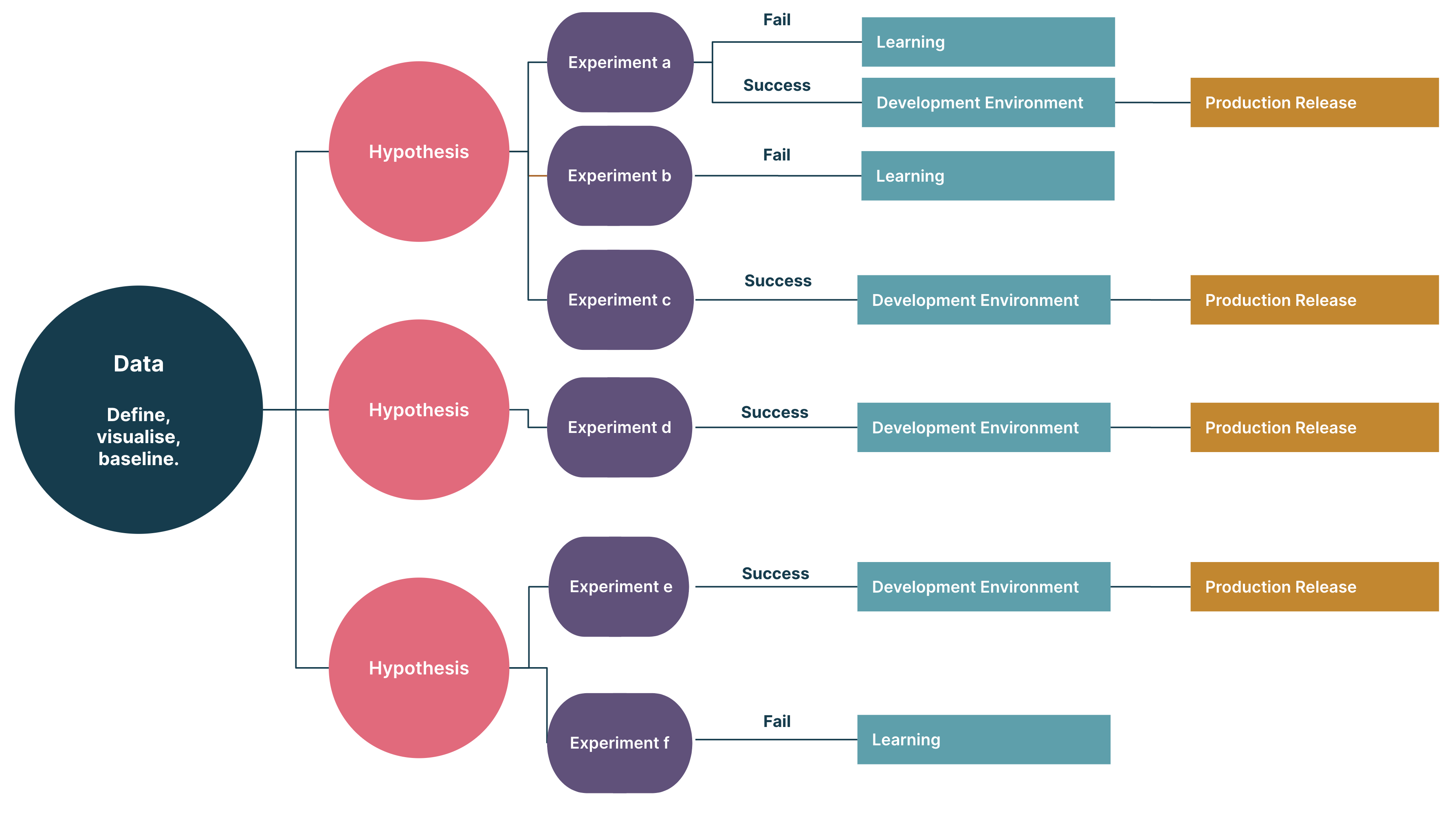

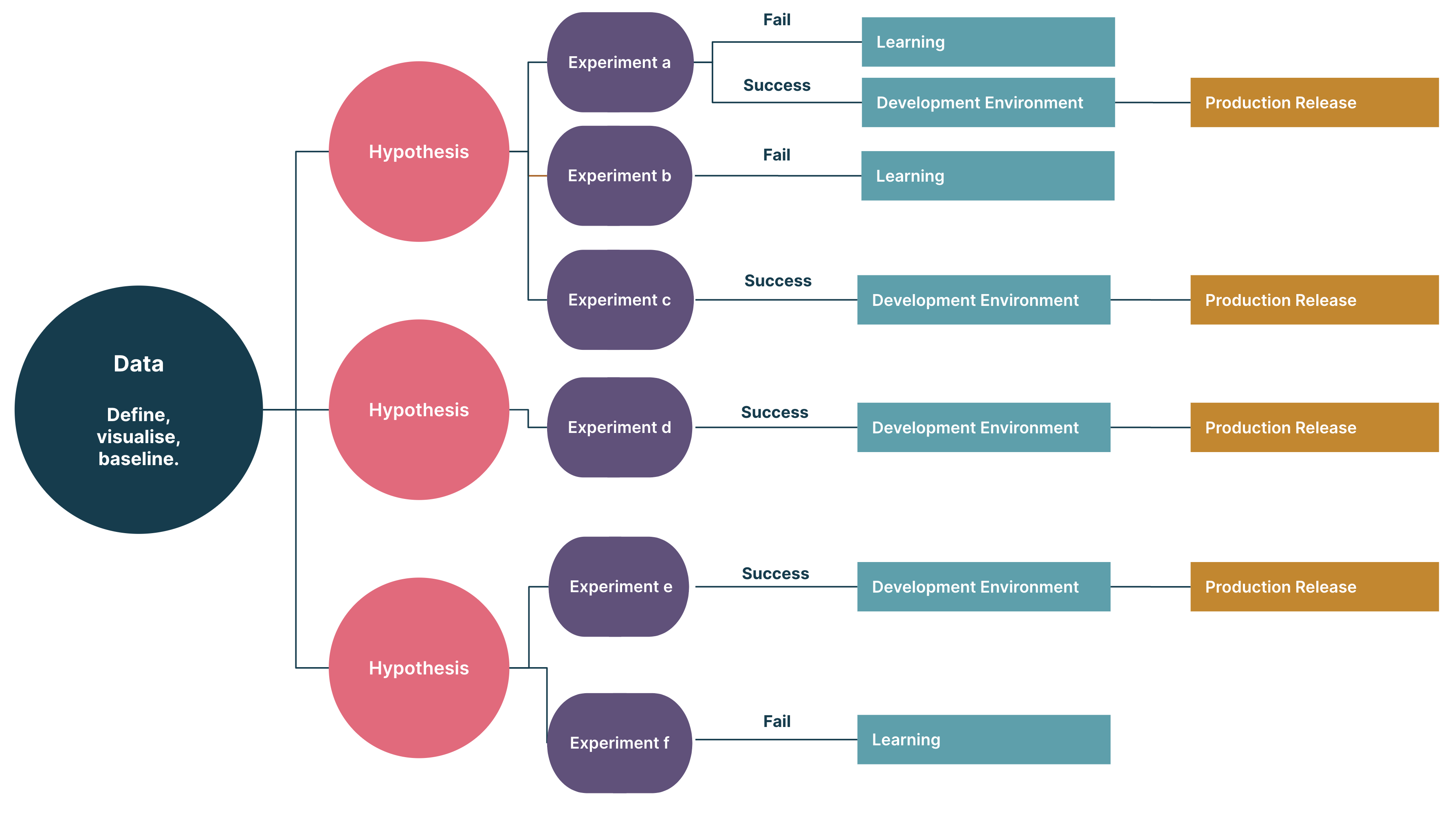

Data-driven hypothesis development (DDHD) is an effective approach when facing complex “known unknown” and “unknown unknown” problems. There are four steps to the approach:

1. Define the goal using data

Problem solving starts with well-defined problems. However, “known unknowns” and “unknown unknowns” are rarely well defined. Most of the time, the only thing we do know is that the system is broken and has many problems; what we don’t know is which problem we most need to solve to achieve the strategic business goal.

The key is to define the problem using data, thus bringing the needed clarity. State the problem, define the metrics upfront and align these with your goals.

Set up a dashboard to visualize and track all key metrics.

2. Hypothesize

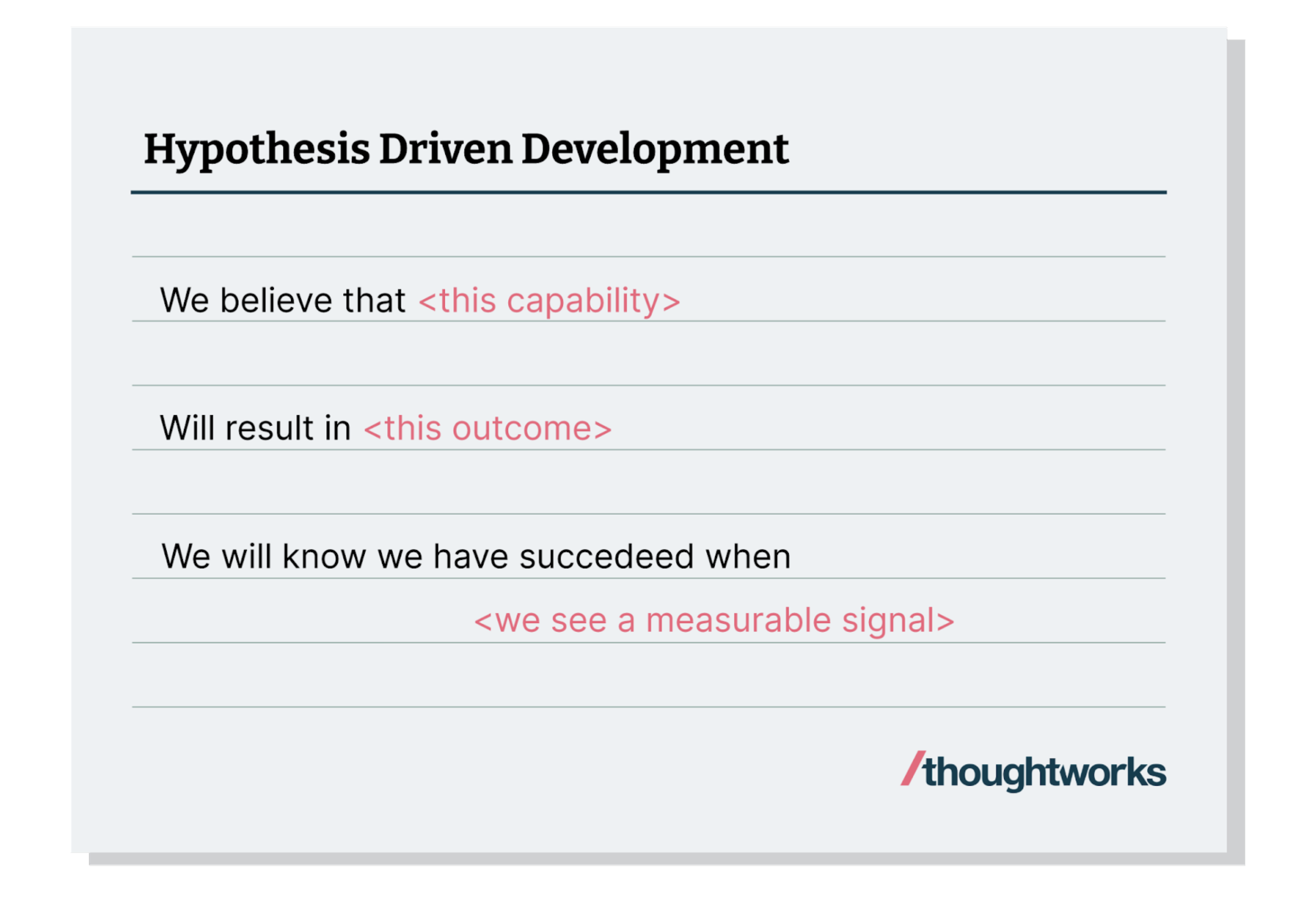

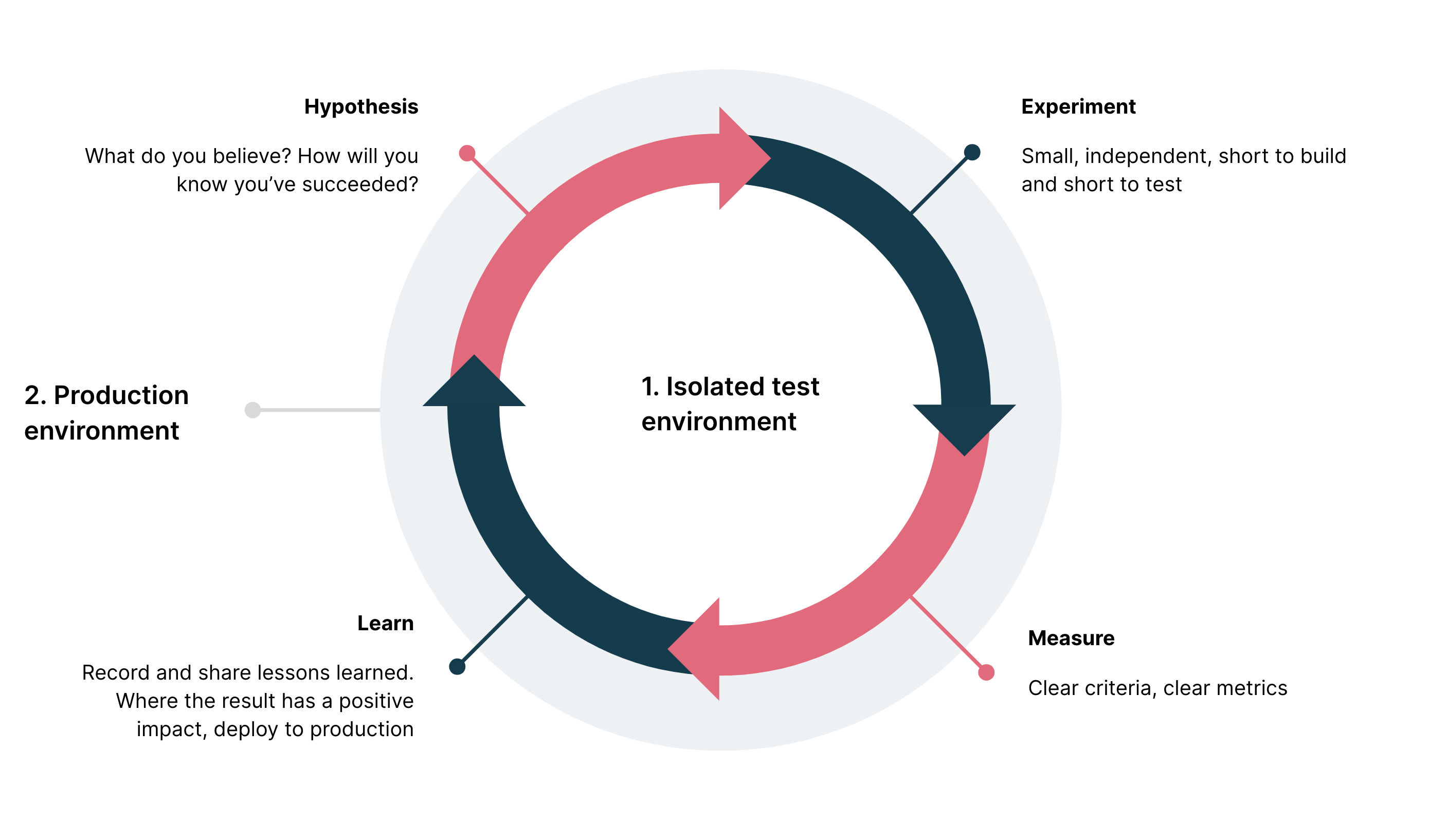

Hypotheses are introduced to create a path to the next state. This requires a change in mindset; proposed solutions are viewed as a series of experiments done in rapid iterations until a desirable outcome is achieved or the experiment is proved not viable.

One hypothesis is made up of one or many experiments. Each experiment is independent, with a clear outcome, criteria and metrics. It should be quick to build, quick to test and quick to learn from. Results should clearly indicate success or failure.

If the result of the experiment has a positive impact on the outcome, the next step would be to implement the change in production.

If an experiment is proved not viable, mark it as a failure, track and share lessons learned.

The capability to fail fast is pivotal. Because we don’t know the exact path to the destination, we need to be able to test different paths quickly and identify the next experiment effectively.

Each hypothesis needs to be able to answer the question: when should we stop? At what point will you have enough information to make an informed decision?
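One way to make the stop question concrete is to record it alongside the hypothesis itself. The sketch below is only an illustration: the class names, field names and the two stop rules (a time box and a cap on failed experiments) are our assumptions, not a prescribed template.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Experiment:
    """One small, independent test of a hypothesis (field names are illustrative)."""
    name: str
    metric: str               # the metric the experiment is expected to move
    success_criterion: str    # what counts as success, stated up front
    outcome: str = "pending"  # "pending", "success" or "failure"


@dataclass
class Hypothesis:
    statement: str
    started: date
    time_box: timedelta       # assumed stop rule 1: a fixed time budget
    max_failures: int         # assumed stop rule 2: a cap on failed experiments
    experiments: list[Experiment] = field(default_factory=list)

    def should_stop(self, today: date) -> bool:
        """Answer "when should we stop?" using the rules agreed up front."""
        failures = sum(e.outcome == "failure" for e in self.experiments)
        succeeded = any(e.outcome == "success" for e in self.experiments)
        out_of_time = today - self.started >= self.time_box
        return succeeded or out_of_time or failures >= self.max_failures


# Made-up hypothesis and experiment, for illustration only.
hypothesis = Hypothesis(
    statement="Caching lookups will reduce response time",
    started=date(2024, 1, 1),
    time_box=timedelta(days=10),
    max_failures=2,
)
hypothesis.experiments.append(
    Experiment("cache hot keys", "p95 latency", "p95 drops by 20%", outcome="failure")
)
stop_now = hypothesis.should_stop(date(2024, 1, 5))
```

Here `stop_now` is `False`: one failure is below the cap and the time box has not run out, so the team would pick the next experiment.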

3. Fast feedback

Experiments need to be small and specific so that we can receive feedback in days rather than weeks. There are at least two feedback loops to build in when a code change is involved:

An isolated testing environment: run the same test suites here to baseline the metrics and compare them with the experiment’s results.

The production environment: once the experiment is proven in the testing environment, it needs to be further tested in a production environment.

Fast feedback delivered through feedback loops is critical in determining the next step.
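As a rough illustration of the first feedback loop, comparing an experiment with its baseline can be as simple as computing the relative change of each metric. The function name, metric names and numbers below are made up for the example.

```python
def compare_to_baseline(baseline: dict[str, float],
                        experiment: dict[str, float]) -> dict[str, float]:
    """Return the relative change of each shared metric versus the baseline.

    A value of -0.25 means the metric is 25% lower than the baseline.
    Metrics with a zero baseline are skipped to avoid dividing by zero.
    """
    changes = {}
    for name, base in baseline.items():
        if name in experiment and base != 0:
            changes[name] = (experiment[name] - base) / base
    return changes


# Made-up numbers from running the same test suite before and after a change.
baseline = {"p95_latency_ms": 800.0, "error_rate": 0.04}
experiment = {"p95_latency_ms": 600.0, "error_rate": 0.04}
changes = compare_to_baseline(baseline, experiment)
```

With these numbers, `changes` shows a 25% drop in `p95_latency_ms` and no change in `error_rate`. Whether that counts as success depends on the criteria agreed before the experiment ran.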

Fast feedback requires solid engineering practices, such as continuous delivery, to accelerate experimentation and maximize what we can learn. We call out a few practices as examples; different systems might require different techniques:

Regression testing automation: for an orphaned legacy system, it’s important to build a regression testing suite as the learning progresses (have a baseline first then evolve as you go), providing a safety net and early feedback if any change is wrong.

Monitoring and observability: monitoring is quite often a big gap in legacy systems, not to mention observability. Start with monitoring: you will learn how the system functions, how it uses resources, when it will break and how it behaves in failure modes.

Performance testing automation: when the problem is about performance, automate the performance testing so you can baseline the problem and rerun the tests with every change.

A/B testing in production: set up the system with a basic ability to run the current system and the change in parallel, and to roll back automatically if needed.
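To illustrate the last practice, an automatic rollback needs a rule that a machine can check. The sketch below assumes a deliberately naive rule: compare error rates between the current system and the change, and roll back when the gap exceeds a tolerance. The function name, parameters and default values are hypothetical, and a real setup would likely want a proper statistical test and more than one metric.

```python
def should_roll_back(current_errors: int, current_requests: int,
                     candidate_errors: int, candidate_requests: int,
                     tolerance: float = 0.01, min_requests: int = 100) -> bool:
    """Decide whether the change running in parallel should be rolled back.

    Rolls back when the candidate's error rate exceeds the current system's
    error rate by more than `tolerance`. Until the candidate has served
    `min_requests`, no decision is made and the function returns False.
    """
    if candidate_requests < min_requests or current_requests == 0:
        return False
    current_rate = current_errors / current_requests
    candidate_rate = candidate_errors / candidate_requests
    return candidate_rate - current_rate > tolerance


# Made-up traffic counts: 1.0% errors on the current system, 2.5% on the change.
roll_back = should_roll_back(10, 1000, 5, 200)
```

In this example `roll_back` is `True`, because the change is 1.5 percentage points worse than the current system and the tolerance is 1 point.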

4. Incremental delivery of value

The value created by experiments, successful and failed, can be divided into three categories:

Tangible improvements on the system

Increased understanding of the problem and more data-informed decisions

Improved understanding of the system via documentation, monitoring, tests, etc.

It’s easy to take successful experiments as the only value delivered. Yet in the complex world of “known unknowns” and “unknown unknowns”, the value of “failed experiments” is equally important, providing clarity in decision making.

Another often-overlooked form of value is the data-backed understanding of the problem and the system. This is extremely useful when domain knowledge has been heavily lost, providing a low-cost, less risky way to rebuild it.

Data-driven hypothesis development enables you to find the shortest path to your desired outcome, in itself delivering value.

Data-driven hypothesis development approach

When facing a complex problem with many known unknowns and unknown unknowns, being data-driven serves as a compass, helping the team stay focused and prioritize the right work. A data-driven approach helps you deliver incremental value and lets you know when to stop.

Why we decided to use data-driven hypothesis development

Our client presented us with a proposed solution, a rebuild, and asked us to implement their shiny new design, believing this approach would solve the problem. However, it wasn’t clear what problem we’d be solving by implementing a new build, so we set out to understand the problem better and judge whether the proposed solution was likely to succeed.

Once we looked at the underlying architecture and discussed how we might do it differently, we discovered there wasn’t a lot we would change. The architecture at its core was solid; however, there were just too many layers of band-aid fixes. Visibility was low, the system was poorly understood and it had been neglected for many years.

DDHD would allow us to run short experiments, to learn as we delivered incremental value to the customer, and to continuously apply our lessons learned to have greater impact and rebuild domain knowledge.

Indicators data-driven hypothesis development might work for you

No or low visibility of the system and the problem

Little knowledge of the system exists within your organization

The system has been neglected for some time with band-aids applied loosely

You don’t know what to fix or where to start

You want to de-risk a large piece of work

You want to deliver value incrementally

You are looking at a complete rebuild as the solution

Our approach

1. Understand the problem and explore all options

To understand all sides of the problem, consider the underlying architecture, the customer, the business, and the issues being surfaced. In one activity we ran, we recorded every known problem and discussed what we did and didn’t know about it. This process involved people outside the immediate team: we invited anyone who might have some knowledge of the system to join the problem discussion.

Once you have an understanding of the problem, share it far and wide. This is the beginning of your story; you will keep telling this story with data throughout the process, building interest, buy-in, support and knowledge.

The framework we used to guide us in our problem discussion.

2. Define the goals using data

As a team, define your goals or the desired outcomes. What is it you want to achieve? Discuss how you will measure success. What are the key metrics you will use? What does success look like? Once you’ve reached agreement on this, you’ll need to set about baselining your metrics.

We used a template similar to the one above to share our goals and record the metrics. The goals were front and center in our daily activities: we talked about them in stand-up, included them on story cards and shared them in our showcases, which helped to anchor our thoughts and hold our focus. In an ideal world, you’ll see a direct line from your goal through to your organization’s overarching objectives.
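To show what baselining a metric against a goal can look like in practice, here is a minimal sketch. The `GoalMetric` class, its fields and the example numbers are our own illustration rather than the template we used.

```python
from dataclasses import dataclass


@dataclass
class GoalMetric:
    name: str
    baseline: float   # value measured before any experiment ran
    target: float     # value the team agreed would count as success
    current: float    # latest measured value

    def progress(self) -> float:
        """Fraction of the distance from baseline to target covered so far.

        Works whether the metric should go up or down; 1.0 means the target
        has been reached. Returns 0.0 when baseline and target are equal.
        """
        span = self.target - self.baseline
        if span == 0:
            return 0.0
        return (self.current - self.baseline) / span


# Made-up example: bring failed jobs per week down from 40 to 10.
metric = GoalMetric("failed_jobs_per_week", baseline=40, target=10, current=25)
print(f"{metric.name}: {metric.progress():.0%} of the way to target")
```

Keeping the baseline next to the target makes it easy to report progress in stand-ups and showcases in the same terms every week.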

3. Hypothesize

One of the reasons we succeeded in solving the problem and delivering outstanding results for our client was that we involved the whole team. We didn’t have just one or two team members writing hypotheses and defining and driving experiments; every single member of the team was involved. To set your team up for success, align on the approach and how you’ll work together. Empower your team to write hypotheses from day one, no matter their role.

We created templates to work from and encouraged pairing on writing hypotheses and defining experiments.

4. Experiment

Run small, data-driven experiments. One hypothesis can have one or many experiments. Experiments should be short to build and short to test. They should be independent and must be measurable.

5. Conclude the experiment

Acceptance criteria play a critical role in determining whether the experiment is successful or not. For successful experiments, we need to build a plan to apply the changes. For all experiments, successful or not, you should revisit the remaining experiments once the experiment completes and adjust them in light of the new data you have collected. This could mean updating, stopping or creating new experiments.

The conclusion of every experiment is the starting point for planning the next step.
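Writing acceptance criteria as data makes the conclusion mechanical rather than a matter of opinion. The sketch below assumes each criterion is a metric name, a comparison and a threshold; the `conclude` function and the example values are hypothetical.

```python
import operator

# Comparison operators allowed in an acceptance criterion.
OPS = {"<=": operator.le, ">=": operator.ge, "<": operator.lt, ">": operator.gt}


def conclude(criteria: list[tuple[str, str, float]],
             results: dict[str, float]) -> str:
    """Return "success" only if every criterion is met by the measured results.

    Each criterion is (metric_name, operator, threshold). A metric missing
    from `results` counts as not met, so the experiment is marked "failure".
    """
    for metric, op, threshold in criteria:
        if metric not in results or not OPS[op](results[metric], threshold):
            return "failure"
    return "success"


# Made-up criteria and results, for illustration only.
criteria = [("p95_latency_ms", "<=", 650.0), ("error_rate", "<=", 0.05)]
outcome = conclude(criteria, {"p95_latency_ms": 600.0, "error_rate": 0.04})
```

Here `outcome` is `"success"`. Either result feeds the same follow-up: revisit the remaining experiments with the new data.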

6. Track the experiment and share results

Use data to tell stories and share your lessons learned. Don’t just share this with your immediate team; share your lessons learned and data with the business and your stakeholders. The more they know, the more empowered they will feel too. Take people on the journey with you. Build an experiment dashboard and use it as an information radiator to visualize the learning.

Key takeaways

Our key takeaways from running a DDHD approach:

Use data to tell stories. Data was key in all of this. We used it in every conversation, every showcase, every brainstorming session. Data helped us to align the business, get buy-in from stakeholders, empower the team, and to celebrate wins.

De-risk a large piece of work. We were asking our clients to trust us to fix the “unknown unknowns” rather than implement a shiny new solution. DDHD enabled us to deliver incremental value, gaining trust each week and de-risking a piece of work with a potential 12 to 24 month timeframe and an equally big price tag.

Be comfortable with failure. We encouraged the team to celebrate the failed experiments as much as the successful ones. Lessons come from failure; failure enables decision making, and through this we find the quickest path to the desired outcome.

Empower the team to own the problem and the goals. Our success was a direct result of the whole team taking ownership of the problem and the goals. The team were empowered early on to form hypotheses and write experiments. Every time they learned something, it was shared back and new hypotheses and/or experiments were formed.

Deliver incremental parcels of value. Keep focussed on delivering small, incremental changes. When faced with a large piece of work and/or a system that has been neglected for some time, it can feel impossible to have an impact. We focussed on delivering value weekly. Delivering value wasn’t just about getting something into the customers’ hands; it was also about learning from failed experiments. Celebrate every step; it means you are inching closer to success.

We’ve found this to be a really valuable approach to dealing with problems that can be classified as ‘known unknowns’ and ‘unknown unknowns’ and we hope you find this technique useful too.