5 Steps to Continuous Measurement

Software development is complex, expensive and time-consuming. Every business wants the highest return on its projects, yet success is still typically judged by whether a project meets its schedule, scope and budget. We argue that metrics focused on the business outcomes of the delivered software are more realistic measures of success.

Over the last year, we have worked closely with a number of clients to explore methods of measuring success based on outcomes rather than output. Both are important, but they measure different things: output is a productivity measure, and outcome is a business measure. We experimented with ways to embed regular quantitative and qualitative measurement into the software development process, so that we measured money earned rather than just story points. We regularly defined and used business metrics at a story level to get fast feedback against initial goals. Business metrics were also used at a macro level for project governance.

We’re measuring it wrong

Often, we find a project is deemed successful if it delivers all features on time and on budget. However, is a project still successful if it delivered minimal business value? Studies have shown that more than 50% of functionality in software is rarely or never used. That is potentially 50% of resources wasted. Going back to the original question, does it matter that something was delivered on time if it won’t be fully utilized?

IT projects regularly focus too heavily on their constraints instead of the value they are delivering. Scope, schedule and costs are easily understood and calculated, but benefits, if measured at all, are usually broad and non-specific. For example, project teams regularly report velocity and burn-up to stakeholders instead of the value they are delivering. Constraints are important, and teams should track them on a regular basis, but they shouldn’t be a measure of success.

We realized that to deliver business value, we need to rethink our approach to metrics. Here are five steps we took on our journey to Continuous Measurement:

#1 Change the Definition of “Done”

In order to measure a project’s success based on the value it delivers instead of its constraints, we had to challenge our established way of working. Traditionally, a feature is considered complete when it has passed all testing and is in production. We questioned this approach and did not count a feature as complete until we had measured its outcomes and learnt from it.

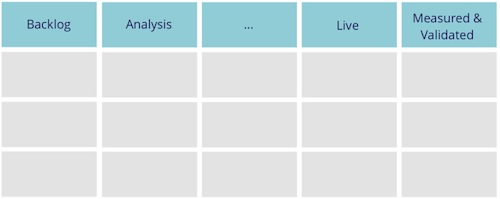

To implement this, we extended our Agile Story Wall by adding a column labelled “Measured and Validated” to the right of the “Live” column, which is typically the end of the lifecycle. A story that had gone live therefore remained visibly incomplete on the wall until we had measured the effect of the feature. Consequently, the team became focused on the outcome the story was delivering and not just on getting the story live. The whole mindset shifted from delivering features to delivering measurable outcomes.
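As a rough illustration, the extended story wall can be modelled as an ordered set of columns in which only the final column counts as done. This is a minimal sketch, not the tooling we used: only “Live” and “Measured and Validated” come from the wall described above, while the other column names, the `Story` class and the sample stories are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Column(IntEnum):
    """Story wall columns, left to right. Only LIVE and
    MEASURED_AND_VALIDATED are named in the article; the rest are assumed."""
    BACKLOG = 0
    IN_DEVELOPMENT = 1
    IN_TESTING = 2
    LIVE = 3
    MEASURED_AND_VALIDATED = 4


@dataclass
class Story:
    title: str
    column: Column

    @property
    def is_done(self) -> bool:
        # Going live is no longer enough: a story is done only once measured.
        return self.column is Column.MEASURED_AND_VALIDATED


def awaiting_measurement(stories):
    """Titles of stories that are live but have not been measured yet."""
    return [s.title for s in stories if s.column is Column.LIVE]


# Hypothetical wall contents, for illustration only.
wall = [
    Story("Move filter bar", Column.LIVE),
    Story("Add more details link", Column.MEASURED_AND_VALIDATED),
    Story("New checkout flow", Column.IN_DEVELOPMENT),
]
print(awaiting_measurement(wall))
```

A list such as the one returned by `awaiting_measurement` makes the unmeasured work as visible as the extra column does on a physical wall.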

#2 Use Hypothesis Driven Development

By changing the definition of done, our efforts evolved from delivering whatever stakeholders thought was the highest-priority story to running an experiment to see whether the feature delivered value. However, the next problem we encountered was how to validate the story’s value against its initial goals and purpose.

Hypothesis Driven Development solved this problem. It is derived from the scientific method. For every experiment, a person states a hypothesis of what is expected to happen, based on research and findings. Afterwards, the experiment’s outcomes are measured against the initial hypothesis to see whether it was correct.

We adopted a new User Story template to reflect that each story was now an experiment. The most common user story template is:

As a <type of user>,

I want <some goal>,

so that <some reason>.

A user story template that supports Hypothesis Driven Development is instead:

We believe that <this capability>

Will result in <this outcome>;

We know we have succeeded when <we see this measurable signal>.

Capability represents the feature we will develop. Outcome refers to the specific business value expected from building the feature. Measurable signals are the indicators that show whether the feature has met the expected outcome. These are qualitative or quantitative metrics that test the hypothesis within a defined time period.

Hypotheses and measurable signals are determined based on existing business data, persona-driven research, user testing, domain expertise, market analysis and other information. Some examples are:

| Capability | Outcome | Measurable Signal |
|---|---|---|
| Moving the filter bar to the top of the search results | Increased customer engagement | Usage of the filter bar increases by 5% within 5 days. |
| Adding more details link to product page | Better communication with customers | 1% increase in conversion |

Each story was measured, once it went live, to gauge its performance against the measurable signal. The results fed back into our product development cycle and influenced future hypotheses and remaining priorities. If a particular change in one part of our application produced unexpected results, we could apply that new real-world data point to other parts.
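To make the template concrete, a hypothesis story and its check against the measurable signal might be sketched as follows. This is an illustration under stated assumptions rather than our actual tooling: the `HypothesisStory` class and its field names are hypothetical, the 5% figure is read as a relative increase over a baseline, and the baseline and observed usage numbers are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypothesisStory:
    capability: str         # "We believe that <this capability>"
    outcome: str            # "will result in <this outcome>"
    metric: str             # what the measurable signal observes
    baseline: float         # metric value before release (assumed known)
    target_increase: float  # relative increase required, 0.05 means 5%
    window_days: int        # time period in which the signal must appear

    def required_value(self) -> float:
        """Metric value that must be reached for the hypothesis to hold."""
        return self.baseline * (1 + self.target_increase)

    def is_validated(self, observed: float) -> bool:
        """True if the value observed after the window meets the signal."""
        return observed >= self.required_value()


# First row of the table above; baseline and observations are made up.
story = HypothesisStory(
    capability="Moving the filter bar to the top of the search results",
    outcome="Increased customer engagement",
    metric="filter bar uses per day",
    baseline=1000.0,
    target_increase=0.05,
    window_days=5,
)

print(story.is_validated(observed=1060.0))  # signal met
print(story.is_validated(observed=1020.0))  # signal not met
```

Recording the baseline and the time window alongside the story keeps the later measurement honest: the target is fixed before the feature goes live, not chosen after the results are in.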

#3 Shorten Your Feedback Cycle

Continuously measuring at a story level against defined hypotheses enabled a fast feedback cycle and quick learning. If a story under-performed against the hypotheses, it either went back into the pipeline to be improved (based on our new learning) or was rolled back. All learning that arose from this process, positive or negative, was critical to the formation of new hypotheses, subsequent story creation, and prioritisation.

Measuring business outcomes gives a development team a foundation for “failing fast” when a hypothesis doesn’t measure up to expectations. By constantly measuring the impact of stories, a team can quantify trends and determine the point at which decreasing return on investment means a project should pivot.
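One simple way to quantify such a trend is to compare the average measured gain of recent iterations with what an iteration costs. The sketch below is only one possible rule, assuming gains and costs can be expressed in the same unit; the function name, the three-iteration window and all figures are hypothetical.

```python
def should_consider_pivot(gains_per_iteration, cost_per_iteration, window=3):
    """Flag diminishing returns: True when the average measured gain over
    the last `window` iterations is below the cost of one iteration."""
    if len(gains_per_iteration) < window:
        return False  # not enough measurements to call it a trend
    recent = gains_per_iteration[-window:]
    return sum(recent) / window < cost_per_iteration


# Invented figures: measured gain per iteration, in the same unit as cost.
early = [50_000, 30_000, 12_000]
later = [50_000, 30_000, 12_000, 8_000, 4_000]

print(should_consider_pivot(early, cost_per_iteration=10_000))
print(should_consider_pivot(later, cost_per_iteration=10_000))
```

A rule like this only prompts the conversation. Whether to pivot remains a judgment for the team and its stakeholders.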

#4 Focus on Business-Oriented Outcomes

As a result of this continuous measurement process, the development team’s focus shifted from delivering software to a particular specification to delivering business-oriented outcomes. This fundamentally aligned software delivery with business strategy and objectives.

Reporting the business measures and outcomes of the stories creates a shared understanding and improves communication among development team members. Specifically, they are able to communicate more effectively what has been delivered. Additionally, business executives can now understand the benefit of what has been delivered and become advocates for IT.

#5 Implement Continuous Delivery, Design and Measurement

Continuous Delivery infrastructure and the Continuous Design processes enable teams to measure quickly and respond to new insights quickly. Continuous Delivery gives teams the ability to deliver frequently and get fast feedback at the push of a button. Continuous Design is the process of regular improvement and evolution of a system as it is developed, rather than specifying the complete design before development starts.

While Continuous Delivery lets us rapidly release features, Continuous Design enables us to iteratively adapt the design. Combining these with Continuous Measurement evolves software delivery. Continuous Measurement and learning is the missing link in this powerful combination, as it enables us to ensure we are building software that meets the business goals.

Continuous Measurement can also be applied to track macro-level progress towards key performance indicators. This is similar to a traditional burn-up chart, which tracks story points against a target. Because business outcomes matter more than story points, we decided to point the burn-up at our key business goal instead. As an example, if the overall goal of the project is to increase conversion, a burn-up chart could be reported every iteration, illustrating the progress towards the project goal.

This adjusted burn-up chart, showing progress in business terms, is more relevant and understandable across the organisation. The concept can be applied to any sector or industry, using different metrics that are relevant to the business situation and project goal.
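The data behind such a chart can be as simple as the share of the gap between a baseline and the target that has been closed after each iteration. The sketch below assumes a conversion-rate goal; the function name and every number in it are invented for illustration.

```python
def kpi_burn_up(baseline, target, measurements):
    """Percentage of the gap between baseline and target closed after each
    iteration, given the KPI value measured at the end of that iteration."""
    gap = target - baseline
    if gap <= 0:
        raise ValueError("target must be greater than baseline")
    return [round(100 * (value - baseline) / gap, 1) for value in measurements]


# Hypothetical project goal: raise conversion from 2.0% to 3.0%.
conversion_by_iteration = [2.1, 2.4, 2.5, 2.8]  # invented measurements
progress = kpi_burn_up(baseline=2.0, target=3.0,
                       measurements=conversion_by_iteration)

for iteration, pct in enumerate(progress, start=1):
    print(f"Iteration {iteration}: {pct}% of goal reached")
```

Unlike a story point burn-up, this series can go down as well as up, since a release may reduce the metric. That is useful information in its own right.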

The Continuous Measurement approach discussed here is applicable to existing software applications, where one can use Continuous Delivery and Continuous Design to get new features live as soon as possible. This enables Continuous Measurement of the outcomes against the hypotheses.

For a greenfield software project, other techniques (e.g., usability testing) are available to measure potential outcomes and validate hypotheses before the first release. New learning may lead to hypotheses of higher value stories to pursue, or may lead the team to “fail fast” without further investment, cutting losses as compared to a lengthy period of analysis. In any case, measures should be applied at a granular level over the course of the project and not only at the end.

In Summary

For software projects to be deemed successful, it is important to measure the business impact that the software has achieved and not just use traditional measures of schedule, scope and budget. In order to do this, Continuous Measurement should be an integral part of the software development process.

Each story should have an expected outcome that can be measured and validated within a certain time period. Validating outcomes will generate new insights, which should be incorporated into a fast feedback cycle and influence future development. Tracking the success of the project can be achieved by introducing macro-level burn-up towards key business performance indicators.

This process results in a shared understanding that will shift the focus to align software delivery with business strategy and objectives. Combining Continuous Design and Delivery with Continuous Measurement allows software projects to take a more outcome-focused approach that ensures business goals are not only met, but are also quantified.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.